Agentic AI in Production: Governance, ROI, and Failure Modes

Agentic AI is moving quickly into production, but without governance it introduces risk and erodes ROI. Learn what separates successful deployments from costly experiments.

Introduction

Agentic AI is moving fast from experimental labs into real production environments. Autonomous systems that can reason, plan, and act across tools promise efficiency gains that traditional automation never delivered.

Yet behind the hype, many production deployments quietly fail.

They fail not because the technology lacks capability, but because it is deployed without the operational structures required to govern decision-making systems. Most teams underestimate what changes when software is allowed to act autonomously.

This article explains why agentic AI succeeds in some organizations and fails in others, and why governance is the missing layer that determines return on investment.

What Agentic AI Means in a Production Context

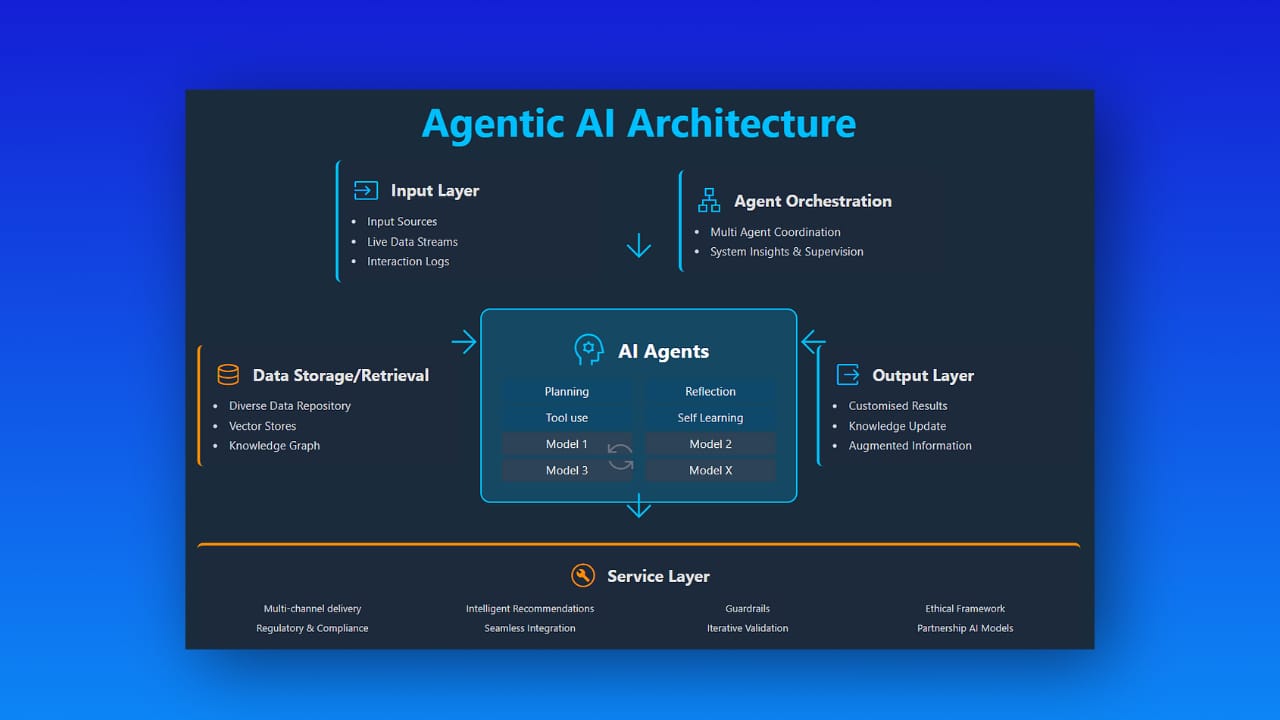

In production, agentic AI is not just automation with a language model attached. It is a system that:

- Interprets context from data, APIs, and user input

- Evaluates multiple possible actions

- Selects and executes actions based on goals rather than scripts

Once an agent can modify data, trigger workflows, or interact with customers, it becomes part of the operational control layer of the organization.

At that point, traditional assumptions about automation no longer apply.

When Agentic AI Actually Works

Agentic AI can deliver measurable value, but only when deployed under strict structural conditions.

Clearly bounded problem domains

Successful implementations operate in environments where the scope is deliberately constrained. Objectives are well defined, the set of allowed actions is limited, and failure conditions are known in advance.

Examples include internal workflow orchestration, decision support systems, and controlled operational processes. Open-ended autonomy almost always leads to instability.

Explicit decision authority

Production-ready systems define decision boundaries upfront. Teams explicitly determine which actions an agent may execute independently, which actions require human approval, and which actions are prohibited entirely.

These limits are enforced at the system and infrastructure level. Prompt instructions alone are insufficient to control autonomous behavior.
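A minimal sketch of the three tiers described above, assuming a hypothetical policy table keyed by action name (`read_ticket`, `issue_refund`, and the other names are illustrative only). What it shows is that the check runs in code before any tool is called, and anything not listed is denied, rather than relying on the prompt to keep the agent in bounds.

```python
from enum import Enum
from typing import Optional


class Decision(Enum):
    ALLOW = "allow"                # agent may execute on its own
    REQUIRE_APPROVAL = "approval"  # a named human must sign off first
    DENY = "deny"                  # never executed


# Hypothetical policy table; the action names are illustrative only.
POLICY = {
    "read_ticket": Decision.ALLOW,
    "draft_reply": Decision.ALLOW,
    "issue_refund": Decision.REQUIRE_APPROVAL,
    "delete_customer": Decision.DENY,
}


class ActionBlocked(Exception):
    """Raised before a tool call that the policy does not permit."""


def authorize(action: str, approved_by: Optional[str] = None) -> Decision:
    """Check an action against POLICY before any tool is invoked.

    Actions missing from the table are denied by default, so a model
    proposing a new action cannot widen its own scope.
    """
    decision = POLICY.get(action, Decision.DENY)
    if decision is Decision.DENY:
        raise ActionBlocked(f"{action!r} is prohibited")
    if decision is Decision.REQUIRE_APPROVAL and not approved_by:
        raise ActionBlocked(f"{action!r} requires human approval")
    return decision


print(authorize("draft_reply"))                        # Decision.ALLOW
print(authorize("issue_refund", approved_by="j.doe"))  # Decision.REQUIRE_APPROVAL
```

Deny-by-default is the important design choice here: a new or hallucinated action name fails closed instead of slipping through.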

Observability by design

Every reliable agentic system is observable. Teams can inspect inputs, understand decision paths, and reconstruct outcomes after the fact. Without this visibility, production incidents become impossible to debug or explain.
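One way to make decision paths inspectable is to record every step of a run as a structured event. The sketch below assumes a hypothetical `DecisionTrace` helper that emits JSON Lines; the step kinds, the tool name `billing.lookup`, and the ticket fields are placeholders, and in practice the output would go to whatever log or tracing backend the team already operates.

```python
import json
import time
import uuid
from dataclasses import asdict, dataclass, field
from typing import Any, Dict, List


@dataclass
class TraceStep:
    kind: str               # e.g. "input", "tool_call", "decision"
    detail: Dict[str, Any]  # must be JSON-serializable
    ts: float = field(default_factory=time.time)


@dataclass
class DecisionTrace:
    run_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    steps: List[TraceStep] = field(default_factory=list)

    def record(self, kind: str, **detail: Any) -> None:
        self.steps.append(TraceStep(kind, detail))

    def to_jsonl(self) -> str:
        # One JSON object per line, each tagged with the run id, so an
        # incident review can pull every step of a single run in order.
        return "\n".join(
            json.dumps({"run_id": self.run_id, **asdict(step)}, sort_keys=True)
            for step in self.steps
        )


trace = DecisionTrace()
trace.record("input", ticket_id="T-123", text="Where is my refund?")
trace.record("tool_call", tool="billing.lookup", args={"ticket_id": "T-123"})
trace.record("decision", action="draft_reply", reason="refund already issued")
print(trace.to_jsonl())
```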

Operational Failure Modes in Agentic AI

Most failures do not look like system crashes. They look like slow degradation.

Behavioral drift

Agent behavior changes over time due to model updates, prompt revisions, or shifts in upstream data. Outputs remain plausible, which delays detection until downstream metrics or customer impact reveal the problem.
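Because individual outputs stay plausible, drift is easier to catch in aggregate than one response at a time. A minimal sketch, assuming the agent's chosen actions are already logged: compare the action mix in a recent window against a baseline using total variation distance. The 0.15 threshold and the action names are hypothetical and would need calibrating per system.

```python
from collections import Counter
from typing import Dict, Iterable, List


def action_distribution(actions: Iterable[str]) -> Dict[str, float]:
    """Share of each action type in a window of agent decisions."""
    counts = Counter(actions)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("empty window")
    return {action: n / total for action, n in counts.items()}


def total_variation(p: Dict[str, float], q: Dict[str, float]) -> float:
    """Half the L1 distance between two distributions: 0 = identical, 1 = disjoint."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def drift_alert(baseline: List[str], recent: List[str], threshold: float = 0.15) -> bool:
    """Flag when the recent action mix diverges from the baseline by more than threshold."""
    return total_variation(action_distribution(baseline), action_distribution(recent)) > threshold


baseline = ["draft_reply"] * 80 + ["escalate"] * 20
recent = ["draft_reply"] * 50 + ["escalate"] * 10 + ["issue_refund"] * 40
print(round(total_variation(action_distribution(baseline), action_distribution(recent)), 2))  # 0.4
print(drift_alert(baseline, recent))  # True
```

This only detects a change in which actions the agent picks, not whether they are correct, so it complements rather than replaces outcome metrics such as correction or escalation rates.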

Toolchain amplification

Agentic systems often integrate with CRMs, billing platforms, internal APIs, and messaging tools. A single incorrect assumption can propagate across multiple systems. Unlike errors in rule-based automation, these failures appear reasonable, which makes them harder to detect and reverse.
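One way to contain amplification is to cap the blast radius of a single run. This sketch assumes every mutating tool wrapper calls a hypothetical `WriteBudget.charge()` before it writes; the limits shown are illustrative. When a run exceeds them, it stops and surfaces for review instead of fanning out across more systems.

```python
from typing import Set


class BlastRadiusExceeded(Exception):
    """Raised before a write that would exceed the run's budget."""


class WriteBudget:
    """Caps how many mutating calls one agent run may make, and across how many systems."""

    def __init__(self, max_writes: int, max_systems: int) -> None:
        self.max_writes = max_writes
        self.max_systems = max_systems
        self.writes = 0
        self.systems: Set[str] = set()

    def charge(self, system: str) -> None:
        """Call before each write; raises instead of letting the write proceed."""
        if self.writes >= self.max_writes:
            raise BlastRadiusExceeded(f"write limit of {self.max_writes} reached")
        if system not in self.systems and len(self.systems) >= self.max_systems:
            raise BlastRadiusExceeded(
                f"run may touch at most {self.max_systems} systems; refused {system!r}"
            )
        self.writes += 1
        self.systems.add(system)


budget = WriteBudget(max_writes=3, max_systems=2)
budget.charge("crm")
budget.charge("billing")
try:
    budget.charge("messaging")  # a third system: refused before any write happens
except BlastRadiusExceeded as exc:
    print(exc)
```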

Ownership ambiguity

When incidents occur, teams struggle to identify accountability. The failure could originate in the model, orchestration logic, data source, or governance layer. Without clear ownership, remediation slows and confidence erodes.

Legal and Compliance Risks

Many agentic AI projects fail during legal or compliance review rather than technical testing.

Lack of decision traceability

Regulatory requirements increasingly demand explainability and auditability. Logging outputs alone is insufficient. Organizations must be able to demonstrate how a decision was reached and which data influenced it.
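Demonstrating which data influenced a decision requires capturing the inputs, not just the output. A minimal sketch, assuming inputs are JSON-serializable: store a SHA-256 fingerprint of each data source alongside the decision and model version, so an auditor can later confirm that the data presented as the basis for a decision is exactly what the agent saw. `record_decision`, `verify`, the source names, and the model version string are hypothetical. Fingerprints establish provenance; they do not explain the reasoning, which still needs a record of the decision path.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Any, Dict


def fingerprint(payload: Any) -> str:
    # Canonical JSON (sorted keys, fixed separators) so equal data always hashes equally
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class ProvenanceRecord:
    decision: str
    sources: Dict[str, str]  # source name -> fingerprint of the data the agent saw
    model_version: str


def record_decision(decision: str, inputs: Dict[str, Any], model_version: str) -> ProvenanceRecord:
    return ProvenanceRecord(
        decision=decision,
        sources={name: fingerprint(data) for name, data in inputs.items()},
        model_version=model_version,
    )


def verify(record: ProvenanceRecord, inputs: Dict[str, Any]) -> bool:
    """True only if the supplied data matches what the decision was based on."""
    return record.sources == {name: fingerprint(data) for name, data in inputs.items()}


inputs = {"crm_profile": {"id": 7, "tier": "gold"}, "refund_policy": "v3"}
record = record_decision("approve_refund", inputs, model_version="model-2024-06")
print(verify(record, inputs))  # True
```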

Excessive data access

Agents are often granted broad permissions for convenience. This increases the risk of unintended data processing, violates data minimization principles, and complicates compliance audits.
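Data minimization can be enforced before data ever reaches the model by filtering records against the scopes granted to each agent. The scope names and field mapping below are hypothetical; the sketch only illustrates the pattern of deny-by-default, field-level filtering.

```python
from typing import Any, Dict, FrozenSet, Iterable, Set

# Hypothetical mapping from a granted scope to the record fields it exposes
SCOPE_FIELDS: Dict[str, FrozenSet[str]] = {
    "customer:contact": frozenset({"id", "name", "email"}),
    "customer:billing": frozenset({"id", "plan", "balance"}),
}


def visible_fields(scopes: Iterable[str]) -> FrozenSet[str]:
    fields: Set[str] = set()
    for scope in scopes:
        fields |= SCOPE_FIELDS.get(scope, frozenset())  # unknown scopes grant nothing
    return frozenset(fields)


def minimize(record: Dict[str, Any], scopes: Iterable[str]) -> Dict[str, Any]:
    """Return only the fields the agent's granted scopes allow it to see."""
    allowed = visible_fields(scopes)
    return {key: value for key, value in record.items() if key in allowed}


customer = {"id": 7, "name": "Ada", "email": "ada@example.com",
            "plan": "pro", "balance": 120, "card_last4": "4242"}
print(minimize(customer, ["customer:contact"]))
# {'id': 7, 'name': 'Ada', 'email': 'ada@example.com'}
```

No scope in this mapping grants `card_last4`, so that field never reaches the agent regardless of what it requests.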

Weak human oversight

Human-in-the-loop is frequently claimed but rarely enforced. Reviews are superficial, overrides are uncommon, and agents operate unchecked under time pressure. From a compliance perspective, this is not oversight but uncontrolled delegation.
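Whether oversight is real can be measured rather than asserted. A sketch, assuming each human review is logged with the time spent and whether the reviewer changed or rejected the agent's proposal: very fast reviews combined with almost no overrides suggest approval has become a formality. Both thresholds are hypothetical, and a low override rate on its own is not proof of rubber-stamping, since it may also mean the agent is performing well.

```python
from dataclasses import dataclass
from statistics import median
from typing import Any, Dict, List


@dataclass
class Review:
    reviewer: str
    seconds_spent: float
    overridden: bool  # True if the human rejected or changed the agent's proposal


def oversight_report(reviews: List[Review], min_median_seconds: float = 30.0,
                     min_override_rate: float = 0.01) -> Dict[str, Any]:
    """Summarize review behavior; thresholds are illustrative, not standards."""
    if not reviews:
        # No recorded reviews at all means oversight cannot be demonstrated
        return {"reviews": 0, "rubber_stamp_risk": True}
    override_rate = sum(r.overridden for r in reviews) / len(reviews)
    median_seconds = median(r.seconds_spent for r in reviews)
    return {
        "reviews": len(reviews),
        "override_rate": override_rate,
        "median_seconds": median_seconds,
        # Fast approvals plus near-zero overrides suggest review is a formality
        "rubber_stamp_risk": median_seconds < min_median_seconds
        and override_rate < min_override_rate,
    }


rushed = [Review("reviewer_a", 4.0, False) for _ in range(200)]
print(oversight_report(rushed)["rubber_stamp_risk"])  # True
```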

Why ROI Often Fails to Materialize

Many organizations deploy agentic AI expecting immediate efficiency gains. Instead, costs quietly accumulate.

Without governance, teams absorb hidden overhead:

- Manual correction of agent mistakes

- Incident response and cleanup

- Customer support escalation

- Emergency rollbacks

As usage scales, even small error rates become systemic. Trust declines internally, adoption stalls, and the system never transitions from experiment to infrastructure.
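A back-of-the-envelope calculation shows why. Every figure below is hypothetical: a 1% error rate and 15 minutes of staff time per correction, held constant while daily volume grows from a pilot to a full rollout.

```python
def monthly_correction_hours(actions_per_day: int, error_rate: float,
                             minutes_per_fix: float, days: int = 30) -> float:
    """Staff hours spent correcting agent mistakes over a period."""
    errors = actions_per_day * error_rate * days
    return errors * minutes_per_fix / 60


# Hypothetical figures: same error rate and fix time, pilot vs. scaled rollout
pilot = monthly_correction_hours(actions_per_day=200, error_rate=0.01, minutes_per_fix=15)
scaled = monthly_correction_hours(actions_per_day=5_000, error_rate=0.01, minutes_per_fix=15)
print(f"pilot: {pilot:.0f} h/month, scaled: {scaled:.0f} h/month")
# pilot: 15 h/month, scaled: 375 h/month
```

At roughly 160 working hours per person per month, the scaled figure is more than two full-time roles spent on manual correction alone, before incident response, escalations, or rollbacks are counted.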

ROI does not fail because agentic AI lacks value. It fails because the surrounding system cannot support autonomous decision-making.

What a Production Governance Layer Requires

Governance is not documentation or policy. It is executable control.

A production-grade governance layer includes:

- Runtime enforcement of action boundaries

- Permission-based access to tools and data

- Full decision logging and replayability

- Kill switches and safe fallback mechanisms

- Continuous monitoring for drift and anomalous behavior
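Of the elements above, kill switches and safe fallbacks are the simplest to sketch. This assumes a single process, where `threading.Event` provides a thread-safe flag; a deployment running many instances would need the flag held in a shared store the team already operates. `KillSwitch` and `run_action` are hypothetical names. On halt or failure, the task is routed to a human queue rather than retried.

```python
import threading
from typing import Callable, List


class KillSwitch:
    """Operator-controlled flag that halts all agent actions in this process."""

    def __init__(self) -> None:
        self._halted = threading.Event()

    def trip(self) -> None:
        self._halted.set()

    def reset(self) -> None:
        self._halted.clear()

    @property
    def tripped(self) -> bool:
        return self._halted.is_set()


def run_action(action: Callable[[], str], switch: KillSwitch,
               human_queue: List[str], description: str) -> str:
    """Run an agent action unless halted; on halt or error, hand it to a person."""
    if switch.tripped:
        human_queue.append(description)
        return "queued_for_human"
    try:
        return action()
    except Exception:
        # Safe fallback: no blind retry, the task goes to the human queue
        human_queue.append(description)
        return "queued_for_human"


queue: List[str] = []
switch = KillSwitch()
print(run_action(lambda: "sent", switch, queue, "send follow-up email"))  # sent
switch.trip()
print(run_action(lambda: "sent", switch, queue, "send follow-up email"))  # queued_for_human
```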

Without these elements, agentic AI remains experimental software operating in production environments.

Need help implementing agentic AI in production?

Designing agentic AI systems that actually work in production requires more than model selection or prompt tuning. It demands a solid architecture for governance, observability, and risk control from day one.

Scalevise helps organizations design and implement production-ready agentic AI systems that:

- operate within clear decision boundaries

- remain auditable and compliant

- deliver measurable ROI instead of experimental overhead

We support teams with architecture design, governance frameworks, and hands-on implementation, tailored to your operational and regulatory context.

If you are exploring agentic AI beyond experiments and want to deploy it responsibly in production, we can help you move forward with confidence.


Strategic Takeaway

Agentic AI does not fail because the technology is immature. It fails because it is deployed without the rigor applied to any system with operational authority.

Organizations that succeed treat agentic AI as governed infrastructure. They design for accountability, auditability, and control from the first deployment.

The competitive advantage will not come from deploying more agents. It will come from deploying agents that can be trusted in production.