Are ChatGPT Agents Safe? What You Need to Know About Security & Browser Sessions

ChatGPT Agents are redefining what AI assistants can do. But with great power comes great responsibility—and growing security concerns. In this article, we dive deep into whether ChatGPT Agents are safe to use, how browser sessions are managed, and what risks businesses and developers need to be aware of.

Why Security Matters for AI Agents

AI agents today are more than chatbots. They can take action, access files, browse the web, and even schedule meetings. That’s why understanding their security posture is critical.

If compromised, these agents could:

- Act on behalf of logged-in users

- Read sensitive documents

- Expose access tokens

- Trigger unwanted actions like emails, deletions, or API calls

The risk is higher still for browser-based agents.

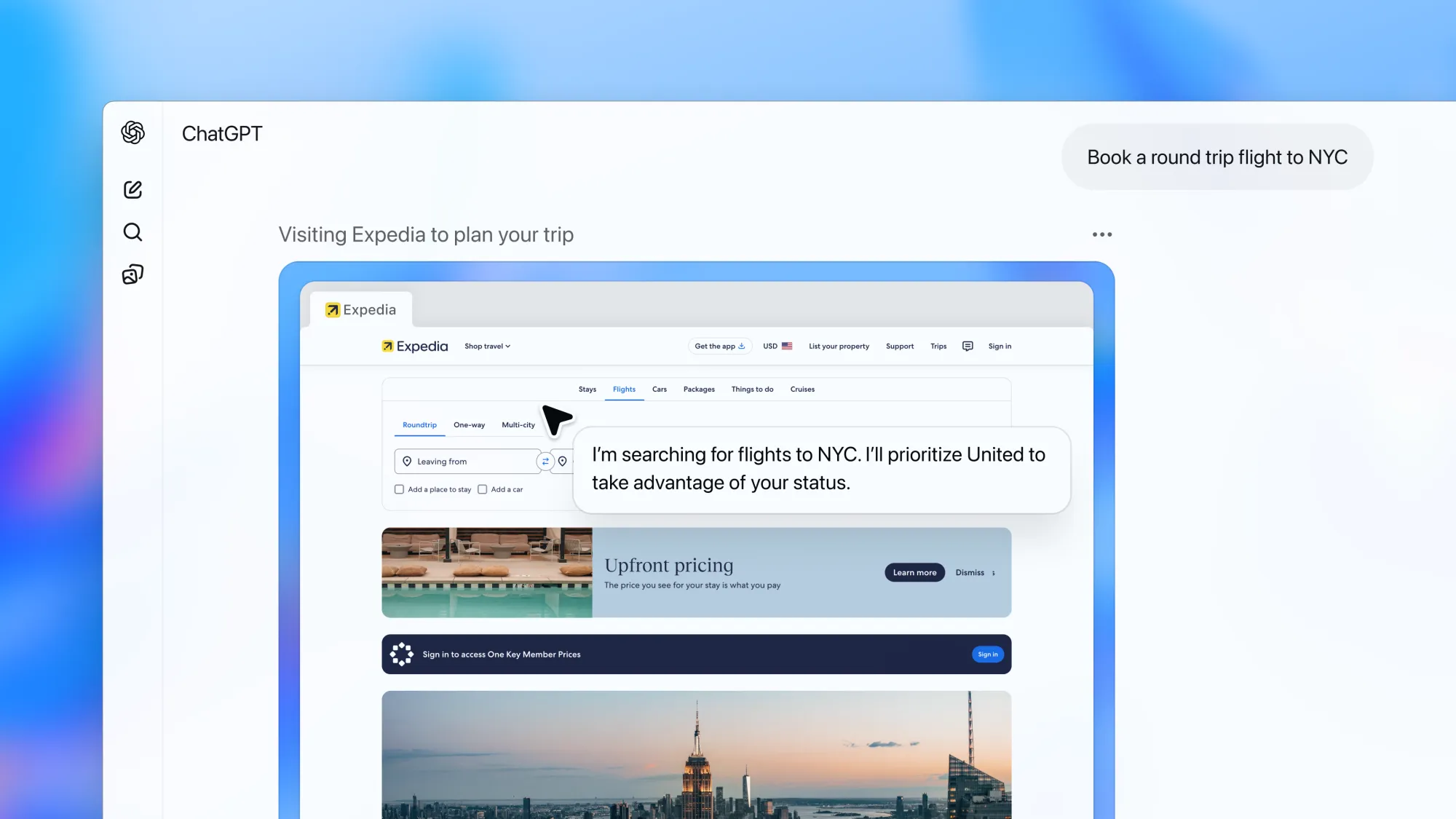

ChatGPT Agent Browser Sessions: How Do They Work?

ChatGPT Agents can operate inside your active browser session. That means:

- They can simulate actions in the same context you're logged into

- They might reuse your cookies and access tabs or iframes

- They potentially see everything a user sees

This raises red flags in terms of:

- Session hijacking

- Token leakage

- Cross-site vulnerability exposure

It also creates ambiguity: is the agent running with your identity, or in its own isolated sandbox?

Common Security Questions

Are ChatGPT Agents sandboxed?

Not in the traditional sense. OpenAI limits system-level access, but browser agents are not fully isolated. A browser-based agent may operate inside an authenticated session, which widens the attack surface.

Can Agents access sensitive browser data?

Potentially, yes. If an agent is operating in an active session, it can interact with authenticated interfaces and see whatever the user can see. It never has to type in a password: if you are already logged in, the existing session does the work.
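To make this concrete, here is a toy model. It is not a description of how ChatGPT Agents are actually implemented. It assumes a hypothetical `BrowserContext` that is nothing more than a cookie store, and a hypothetical `fake_crm_server` that trusts any request carrying a valid session cookie. An agent that reuses the user's context is authenticated without ever seeing a password; an agent given a fresh, isolated context is not.

```python
from dataclasses import dataclass, field

# Hypothetical session token the user obtained by logging in earlier.
VALID_SESSIONS = {"sess-abc123"}


@dataclass
class BrowserContext:
    """Toy stand-in for a browser profile: just a cookie store."""
    cookies: dict[str, str] = field(default_factory=dict)


def fake_crm_server(cookies: dict[str, str]) -> str:
    """Hypothetical server that trusts any request with a valid session cookie."""
    if cookies.get("session") in VALID_SESSIONS:
        return "200 OK: customer export"
    return "401 Unauthorized"


def agent_request(ctx: BrowserContext) -> str:
    # The agent never types a password; it just sends whatever cookies its context holds.
    return fake_crm_server(ctx.cookies)


# The user logged in earlier, so their context already holds the session cookie.
user_ctx = BrowserContext(cookies={"session": "sess-abc123"})

shared = agent_request(user_ctx)             # agent reuses the user's context
isolated = agent_request(BrowserContext())   # agent gets a fresh, empty context

print(shared)    # 200 OK: customer export
print(isolated)  # 401 Unauthorized
```

In this model, isolation is a property of the cookie store the agent is handed, not of the agent itself.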

Is Chrome-based agent execution secure?

Chrome extension-based execution introduces new attack vectors, especially if:

- Third-party agents are installed

- Users are unaware of scope or permissions

- Devs don’t set strict sandbox rules

This is especially risky in enterprise environments where login sessions include email, CRMs, financial platforms, and source code systems.

Red Team Scenario: What Could Go Wrong?

Let’s say a user has ChatGPT Agents enabled and is logged into their company CRM and Google Workspace. If a malicious agent gains access to that session:

- It could read internal emails

- Export CRM data

- Schedule phishing meetings

- Trigger automated workflows via existing integrations

This isn’t hypothetical: it is a real attack surface.

What Security Measures Exist?

OpenAI has taken steps to limit agent risk:

- Agents are approved via an internal moderation process

- There’s no direct file system access (outside the tools you allow)

- Logs are monitored for abuse

However:

- Browser execution remains a grey zone

- There is no fine-grained permission management

- OAuth scopes are not transparent enough for most users
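For contrast, fine-grained permission management could look something like the sketch below. Nothing here is an OpenAI API: the `AgentPolicy` class, the `authorize` helper, and the resource and action names (`calendar`, `crm`, `create_event`, ...) are hypothetical. The idea is that each agent declares exactly which actions it may take on which resource, and everything else is denied by default.

```python
from dataclasses import dataclass, field


@dataclass
class AgentPolicy:
    """Hypothetical per-agent permission manifest: resource -> allowed actions."""
    agent_id: str
    grants: dict[str, frozenset[str]] = field(default_factory=dict)

    def allows(self, resource: str, action: str) -> bool:
        # Deny by default: unknown resources and unlisted actions are refused.
        return action in self.grants.get(resource, frozenset())


def authorize(policy: AgentPolicy, resource: str, action: str) -> None:
    """Raise before the action runs if the policy does not cover it."""
    if not policy.allows(resource, action):
        raise PermissionError(f"{policy.agent_id} may not {action} on {resource}")


# A hypothetical scheduling agent that should only touch the calendar.
scheduler = AgentPolicy(
    agent_id="meeting-scheduler",
    grants={"calendar": frozenset({"read", "create_event"})},
)

authorize(scheduler, "calendar", "create_event")  # allowed, returns None

try:
    authorize(scheduler, "crm", "export")  # never granted, so refused
except PermissionError as err:
    print(err)  # meeting-scheduler may not export on crm
```

The deny-by-default lookup is the important design choice: forgetting to list a permission fails closed rather than open.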

What You Can Do to Stay Safe

If you're planning to use ChatGPT Agents in your team, follow these practices:

- Disable Agents in Chrome for high-risk accounts

- Avoid using Agents in authenticated sessions

- Use agent previews in private browser windows

- Regularly review granted permissions

- Don’t trust third-party agents unless vetted
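Reviewing granted permissions can be partly automated. The sketch below assumes you already have the list of OAuth scopes granted to an agent or integration, however your identity provider exposes it; obtaining that list is out of scope here. The scope strings are real Google Workspace scopes, but the `HIGH_RISK_SCOPES` selection and the `review_scopes` helper are our own illustrative choices, not an official risk classification.

```python
# Illustrative (not official) selection of broad Google Workspace scopes worth flagging.
HIGH_RISK_SCOPES = {
    "https://mail.google.com/": "full read/write/delete access to Gmail",
    "https://www.googleapis.com/auth/gmail.send": "can send email as the user",
    "https://www.googleapis.com/auth/drive": "full access to all Drive files",
    "https://www.googleapis.com/auth/calendar": "full read/write access to calendars",
}


def review_scopes(granted: list[str]) -> list[str]:
    """Return a human-readable warning for every high-risk scope in `granted`."""
    warnings = []
    for scope in sorted(set(granted)):  # de-duplicate and keep output order stable
        reason = HIGH_RISK_SCOPES.get(scope)
        if reason is not None:
            warnings.append(f"REVIEW {scope}: {reason}")
    return warnings


# Hypothetical grant list for a third-party agent.
granted = [
    "https://www.googleapis.com/auth/calendar.readonly",
    "https://www.googleapis.com/auth/drive",
    "https://mail.google.com/",
]

for line in review_scopes(granted):
    print(line)
```

Exact matching is deliberate: a narrow scope such as `calendar.readonly` is not flagged just because it shares a prefix with the broad `calendar` scope.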

Our Verdict: Use with Caution

ChatGPT Agents are powerful—but still immature in their security model. Until OpenAI provides:

- Full session isolation

- Transparent permission systems

- Agent trust scores and audits

...you should approach them with zero-trust principles.

They are not yet enterprise-safe by default.
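Applied to agents, zero trust means verifying each consequential action instead of trusting the session the agent runs in. A common pattern is a human-in-the-loop gate for anything with side effects. The sketch below is framework-agnostic and hypothetical: `ProposedAction`, `SIDE_EFFECTS`, `execute`, and the `confirm` callback are our own names, and a real deployment would route confirmation through a UI rather than a function argument.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical action kinds that change state outside the agent
# (emails, deletions, API calls, data exports).
SIDE_EFFECTS = {"send_email", "delete", "api_call", "export"}


@dataclass
class ProposedAction:
    kind: str
    target: str


def execute(action: ProposedAction, confirm: Callable[[ProposedAction], bool]) -> str:
    """Run read-only actions directly; require explicit approval for side effects."""
    if action.kind in SIDE_EFFECTS and not confirm(action):
        return f"blocked: {action.kind} on {action.target}"
    # Placeholder for the real tool call.
    return f"done: {action.kind} on {action.target}"


def deny_all(action: ProposedAction) -> bool:
    """Reviewer that rejects everything: a safe default for unattended runs."""
    return False


print(execute(ProposedAction("read", "inbox"), deny_all))            # done: read on inbox
print(execute(ProposedAction("send_email", "all-staff"), deny_all))  # blocked: send_email on all-staff
```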

Bonus: What the Dev.to Community Is Saying

“I want to love ChatGPT Agents, but I don’t trust them in a logged-in browser session yet.”

— Senior Developer on Dev.to

“Until we have scoped permissions, I consider this feature alpha.”

— CTO in cybersecurity startup

Final Thoughts

ChatGPT Agents are a leap forward, but so were cloud VMs before people learned to secure them.

Be early. Be curious. But don’t be reckless.

Need Help Securing Your AI Stack?

Scalevise helps teams integrate AI tools securely, including agent vetting, custom sandboxing, and secure API workflows.

→ Book an AI Security Audit
