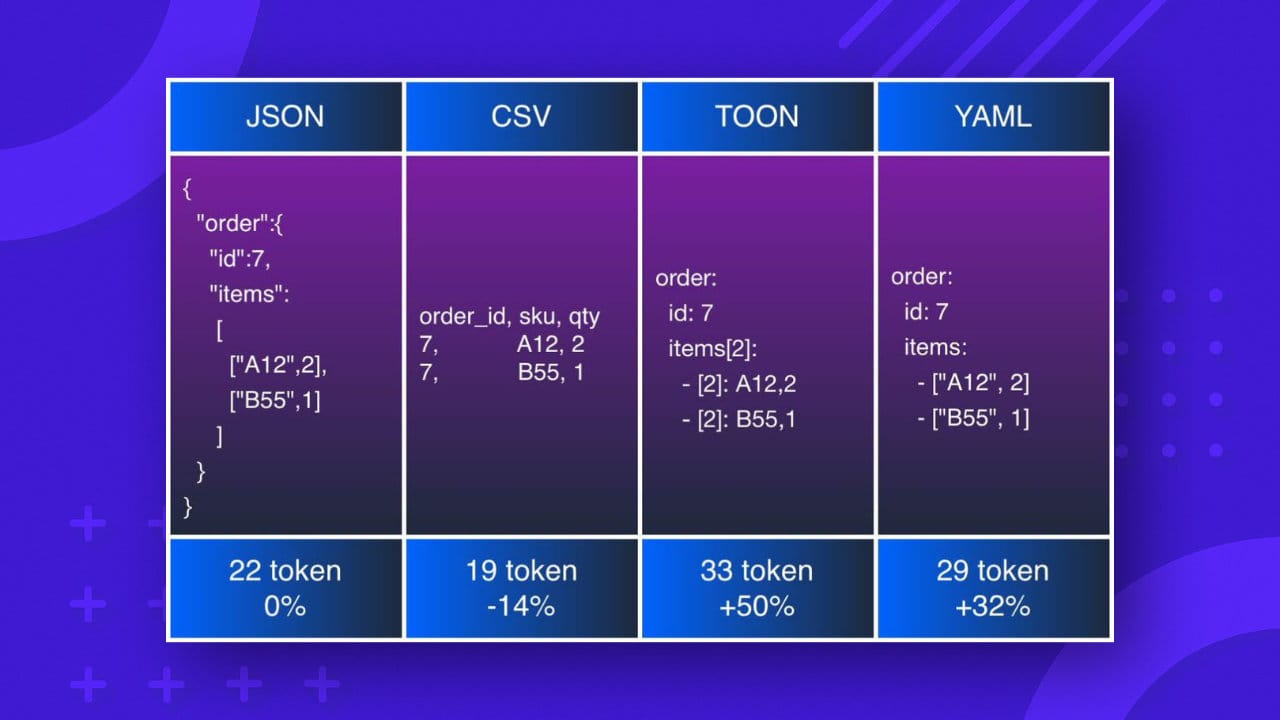

Why JSON Slows Down LLMs and Why TOON and YAML Are Better Data Formats for AI

JSON was never designed for large language models. This article explains why JSON becomes a performance bottleneck in LLM context windows and how lightweight formats like TOON and YAML improve token efficiency, speed, and API cost control.

JSON has quietly become one of the biggest performance bottlenecks in modern AI systems. Not because it is broken, but because it was never designed for large language models. As LLM usage scales and context windows become more valuable, teams are starting to ask the right question: why are we still feeding models a format optimized for humans and machines, but not for tokens?

This article explains why it performs poorly in LLM environments, how token efficiency actually works, and why lightweight formats like TOON are emerging as a practical alternative.

Also See: JSON to TOON Converter

LLMs Do Not Read Files, They Process Tokens

Large language models do not interpret data structures. They process tokenized text. Every character you send is broken down into tokens, and every token consumes budget, time, and context capacity.

JSON looks compact to developers, but tokenizers see something very different. Quotation marks, braces, commas, repeated keys, and deep nesting all inflate token counts without adding semantic value. The model does not gain more understanding from the structure. It only pays the cost.

This creates a structural mismatch. JSON is optimized for interoperability and strict validation. LLMs are optimized for probabilistic reasoning over dense semantic input. When these two collide, efficiency suffers.

Token Efficiency Is About Semantic Density, Not Compression

Token efficiency is often misunderstood. It is not about compressing data or encoding tricks. It is about maximizing meaning per token.

A token-efficient format minimizes repetition, reduces syntactic noise, and relies on implicit structure rather than explicit ceremony. Once a model understands the pattern, repeating it thousands of times is wasteful.

JSON violates this principle by design. Every object restates its structure. Every key is repeated. Every value is wrapped in syntax the model does not need. At small scale this is tolerable. At production scale it becomes expensive.

Why JSON Becomes a Scaling Problem for AI Systems

The cost impact compounds quickly. Larger prompts increase inference latency. API usage becomes harder to predict. Context windows fill up before reasoning even begins. Truncation strategies kick in and silently remove important instructions or data.

This is especially painful in retrieval augmented generation, agent memory systems, multi step reasoning pipelines, and long running conversations. Teams often try to fix the symptoms by switching models or increasing context limits, but the underlying inefficiency remains.

JSON is not just neutral in these scenarios. It actively competes with reasoning for space.

YAML Is Better, But Only Marginally

YAML is often suggested as a more AI friendly alternative. It removes some syntactic overhead and improves human readability. In practice, it is slightly more token efficient than JSON.

However, YAML still repeats keys, still relies on structural markers, and still introduces ambiguity that requires additional tokens to resolve. For small prompts the difference is negligible. For large context windows it helps, but it does not change the fundamentals.

YAML is an incremental improvement. It is not a structural solution.

The Case for Lightweight Formats in LLM Context Windows

Lightweight formats start from a different assumption. The consumer is not a strict parser or a human reader. The consumer is a language model that can infer structure, patterns, and relationships without explicit syntax.

By removing repeated keys, minimizing separators, and flattening predictable structures, lightweight formats dramatically increase semantic density. More meaning fits into fewer tokens. Reasoning gets more room. Costs drop. Latency improves.

TOON as a JSON Alternative for LLMs

TOON is designed specifically for this gap. It is not meant to replace JSON everywhere. It is meant to replace JSON inside LLM context windows.

Instead of repeating keys, TOON establishes structure once and relies on positional consistency. Instead of verbose syntax, it uses minimal markers that tokenize efficiently. Instead of rigid schemas, it embraces predictability and inference.

For LLMs, this is a natural fit. Models are exceptionally good at understanding ordered, patterned input. TOON leans into that strength rather than fighting it.

The result is faster prompts, lower API costs, and more reliable outputs under context pressure.

When You Should and Should Not Use Lightweight Formats

Lightweight formats are not a universal replacement. It remains the right choice for APIs, storage, validation, and interoperability. TOON and similar formats belong at the AI boundary, where data is prepared specifically for model consumption.

If the data is entering an LLM context window, token efficiency matters. If it is not, JSON is still fine.

The mistake is using the same format everywhere without questioning its cost.

The Future of Data Formats for AI

As LLMs move deeper into production systems, data formats will evolve. We will see more AI first representations that optimize for reasoning, cost, and speed rather than strict machine parsing.

JSON will not disappear. But it will stop being the default for AI prompts.

The teams that recognize this early will ship faster, cheaper, and more reliable AI systems.