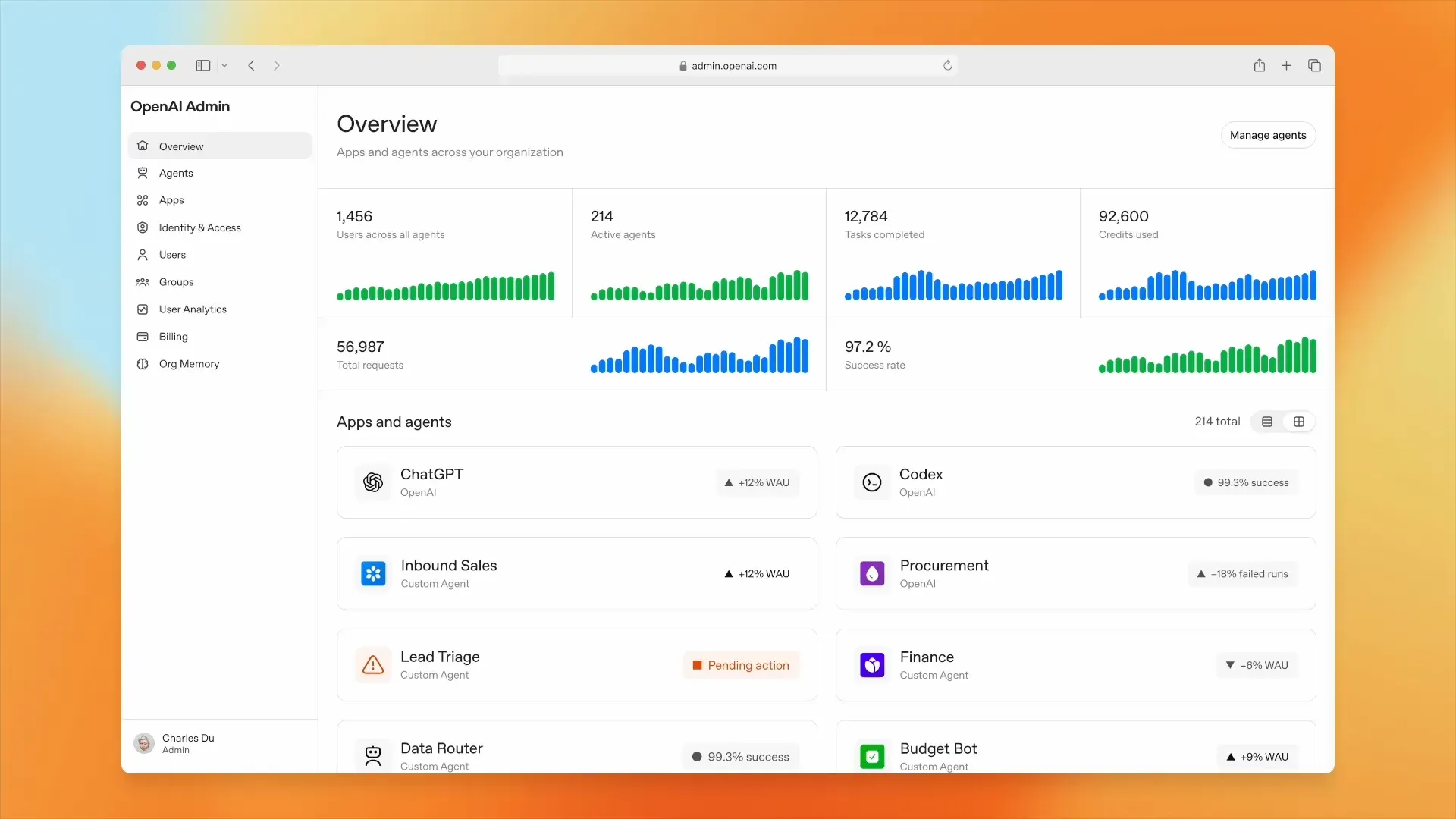

OpenAI Frontier Features: Enterprise-Ready AI Agent Capabilities

A deep dive into the real capabilities inside OpenAI Frontier and what makes it suitable for secure, scalable enterprise AI agent deployment.

OpenAI Frontier has quickly become one of the most discussed developments in enterprise AI. While much of the conversation focuses on its broader vision, the real value lies beneath the surface. What does the platform actually allow companies to do? Which capabilities make it suitable for real production environments rather than controlled demos? In this article, we move past positioning and explore the concrete functionalities inside Frontier that make large-scale AI agent deployment realistic, secure, and operationally viable.

This article focuses purely on the strongest functional capabilities inside OpenAI Frontier and why they matter from an architectural and operational perspective.

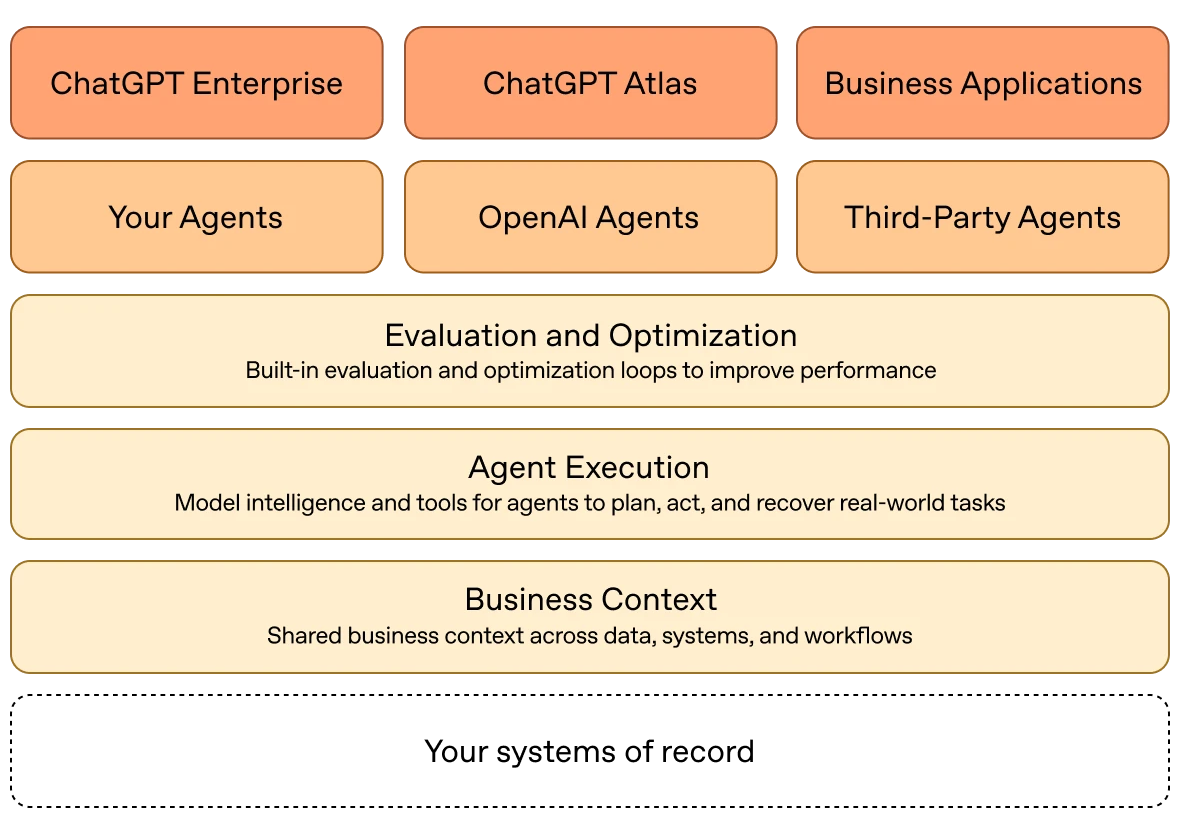

Tool Execution as a Controlled Action Layer

The most important capability inside Frontier is structured tool usage.

Frontier agents are not limited to generating responses. They can execute defined actions within a controlled environment. That means calling internal APIs, triggering workflows, querying data sources, and interacting with enterprise systems.

The key distinction is control.

Actions are not free-form. They are executed through defined tools with scoped permissions. This creates an execution boundary: an agent can do only what its tools and permissions allow, which is what makes it safe enough to run inside real environments.

For enterprises, this shifts AI from advisory output to task execution infrastructure.
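
To make the idea of a controlled action layer concrete, here is a minimal Python sketch of a tool registry that refuses any call outside an agent's granted scopes. It is not Frontier's API: `Tool`, `ToolRegistry`, `PermissionDenied`, the scope strings, and the two example tools are hypothetical, and the handlers are stubs standing in for real API calls.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


class PermissionDenied(Exception):
    """Raised when an agent calls a tool outside its granted scopes."""


@dataclass(frozen=True)
class Tool:
    name: str
    required_scope: str           # hypothetical scope string, e.g. "crm:read"
    handler: Callable[..., Any]   # the function that performs the actual action


@dataclass
class ToolRegistry:
    tools: dict[str, Tool] = field(default_factory=dict)

    def register(self, tool: Tool) -> None:
        self.tools[tool.name] = tool

    def execute(self, name: str, granted_scopes: set[str], **kwargs: Any) -> Any:
        # Only registered tools can run; the agent cannot invent new actions.
        if name not in self.tools:
            raise KeyError(f"unknown tool: {name}")
        tool = self.tools[name]
        # The agent's granted scopes must cover the tool's required scope.
        if tool.required_scope not in granted_scopes:
            raise PermissionDenied(f"{name} requires scope {tool.required_scope!r}")
        return tool.handler(**kwargs)


registry = ToolRegistry()
registry.register(Tool("lookup_customer", "crm:read",
                       lambda customer_id: {"id": customer_id, "tier": "gold"}))
registry.register(Tool("create_ticket", "tickets:write",
                       lambda summary: f"TICKET-1: {summary}"))

sales_scopes = {"crm:read"}
print(registry.execute("lookup_customer", sales_scopes, customer_id="C-42"))
# prints {'id': 'C-42', 'tier': 'gold'}
try:
    registry.execute("create_ticket", sales_scopes, summary="Follow up")
except PermissionDenied as err:
    print("blocked:", err)  # prints blocked: create_ticket requires scope 'tickets:write'
```

The design choice worth noting is that the permission check sits in the execution path itself, so a model cannot reach a tool by phrasing a request cleverly; it either holds the scope or the call fails.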

Figure: Frontier example for inbound sales.

Persistent Organizational Context

Traditional chat-based AI systems are largely stateless: each session starts without memory of what came before. Frontier changes that by allowing persistent contextual grounding.

Agents can operate with awareness of company documentation, process structures, historical interactions, structured datasets, and workflow state.

This makes a major difference in real deployments.

- A support agent can track ticket progression across time.

- A sales agent can understand pipeline evolution.

- An engineering agent can interpret repository history and prior decisions.

Persistent context transforms AI from reactive conversation into embedded operational intelligence.
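
A rough sketch of persistent context, assuming a simple per-entity event log: interactions are recorded against an ID (here a support ticket), saved, and reloaded in a later session to rebuild the agent's working context. `ContextStore` and its methods are hypothetical; JSON serialization stands in for the database or retrieval layer a production system would use, and nothing here reflects how Frontier stores context internally.

```python
import json
from dataclasses import dataclass, field


@dataclass
class ContextStore:
    """Hypothetical per-entity memory: events keyed by an ID such as a ticket number."""
    history: dict[str, list[str]] = field(default_factory=dict)

    def record(self, entity_id: str, event: str) -> None:
        self.history.setdefault(entity_id, []).append(event)

    def build_context(self, entity_id: str, max_events: int = 5) -> str:
        # Keep only the most recent events so the context fits a size budget.
        events = self.history.get(entity_id, [])
        recent = events[-max_events:] if max_events > 0 else []
        return "\n".join(f"- {event}" for event in recent)

    def dump(self) -> str:
        # Serialize so the memory survives between sessions (a real system would use a database).
        return json.dumps(self.history)

    @classmethod
    def load(cls, raw: str) -> "ContextStore":
        return cls(history=json.loads(raw))


store = ContextStore()
store.record("TICKET-7", "Opened: customer cannot log in")
store.record("TICKET-7", "Agent asked for browser version")
store.record("TICKET-7", "Customer replied: Firefox on Windows")

# Later session: restore the saved state and pick up where the ticket left off.
restored = ContextStore.load(store.dump())
print(restored.build_context("TICKET-7", max_events=2))
```

Capping the context to the most recent events is one simple policy; real deployments often combine recency with retrieval of older but relevant records.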

Permission-Scoped Access Control

Security is not an add-on in Frontier. It is structural.

Agents operate under defined permission layers. Access can be restricted by role. Tool usage can be scoped. Actions can be logged and audited.

This matters because AI agents executing real actions must align with enterprise identity and access management systems.

Without permission boundaries, deployment risk is unacceptable.

Frontier enables AI to function inside compliance-driven organizations without bypassing governance frameworks.
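
Role-based scoping and auditability can be illustrated together. The sketch below maps roles to permitted actions, denies anything not explicitly granted, and appends every decision to an audit log. `ROLE_PERMISSIONS`, `AccessController`, and the role names are hypothetical placeholders for whatever identity and access management system an organization already runs.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping standing in for an enterprise IAM system.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "support_agent": {"tickets:read", "tickets:write"},
    "sales_agent": {"crm:read"},
}


@dataclass(frozen=True)
class AuditEntry:
    timestamp: str
    agent_id: str
    role: str
    action: str
    allowed: bool


@dataclass
class AccessController:
    audit_log: list[AuditEntry] = field(default_factory=list)

    def authorize(self, agent_id: str, role: str, action: str) -> bool:
        # Deny by default: unknown roles get an empty permission set.
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        # Record every decision, allowed or denied, for later audit.
        self.audit_log.append(AuditEntry(
            timestamp=datetime.now(timezone.utc).isoformat(),
            agent_id=agent_id, role=role, action=action, allowed=allowed,
        ))
        return allowed


ac = AccessController()
ac.authorize("agent-17", "sales_agent", "crm:read")        # True
ac.authorize("agent-17", "sales_agent", "tickets:write")   # False: outside the role
ac.authorize("agent-99", "unknown_role", "crm:read")       # False: no such role
denied = [(e.agent_id, e.action) for e in ac.audit_log if not e.allowed]
print(denied)  # [('agent-17', 'tickets:write'), ('agent-99', 'crm:read')]
```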

Built-In Evaluation and Feedback Loops

One of the most powerful yet under-discussed capabilities in Frontier is structured evaluation.

Agents are not static deployments. They can be measured, evaluated, and improved through feedback cycles.

Instead of guessing whether an AI system performs well, organizations can track output quality and reliability over time.

This introduces operational discipline. AI agents can be refined based on measurable performance rather than anecdotal impressions.

In enterprise environments, this is what separates experimentation from scalable deployment.
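
A minimal version of an evaluation loop, assuming the agent can be called as a function and that a labeled test set exists: run each version over the same cases and compare scores. The two toy agents, `EvalCase`, and `evaluate` are hypothetical, and exact-match accuracy is a deliberately simple metric; real evaluations of free-form output usually need graded rubrics or model-based scoring.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class EvalCase:
    prompt: str
    expected: str


def evaluate(agent: Callable[[str], str], cases: list[EvalCase]) -> float:
    """Fraction of cases where the agent's output exactly matches the expected label."""
    if not cases:
        raise ValueError("need at least one eval case")
    passed = sum(1 for case in cases if agent(case.prompt).strip() == case.expected)
    return passed / len(cases)


# Toy stand-ins for two versions of an intent-routing agent (hypothetical).
def agent_v1(prompt: str) -> str:
    return "refund" if "money back" in prompt.lower() else "other"


def agent_v2(prompt: str) -> str:
    text = prompt.lower()
    if "password" in text:
        return "password_reset"
    return "refund" if "money back" in text else "other"


cases = [
    EvalCase("I want my money back", "refund"),
    EvalCase("Please give me my money back now", "refund"),
    EvalCase("How do I reset my password?", "password_reset"),
    EvalCase("Where is my order?", "other"),
]

scores = {"v1": evaluate(agent_v1, cases), "v2": evaluate(agent_v2, cases)}
print(scores)  # {'v1': 0.75, 'v2': 1.0}
```

Keeping the test set fixed across versions is what turns the score into a trend line rather than an anecdote.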

Multi-Step Task Orchestration

Frontier supports structured multi-step reasoning combined with tool execution.

Agents can break down complex tasks into intermediate stages, execute sequential actions, validate outputs, and adapt decisions based on results.

This makes advanced use cases viable for:

- Complex report generation from multiple data sources

- Cross-system compliance validation

- Technical incident analysis across logs and dashboards

- Data reconciliation workflows

The orchestration layer enables AI to operate across processes rather than inside isolated prompts.
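
The pattern described above (break work into stages, validate each result, adapt when a check fails) can be sketched as a small pipeline runner, shown here on a data reconciliation task. `Step`, `orchestrate`, and the invoice data are hypothetical; in a real agent the `run` functions would be tool calls chosen by the model rather than fixed lambdas.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional

State = dict[str, Any]


@dataclass
class Step:
    name: str
    run: Callable[[State], Any]                         # reads earlier results from shared state
    validate: Callable[[Any], bool]                     # checks the result before moving on
    fallback: Optional[Callable[[State], Any]] = None   # alternative if validation fails


def orchestrate(steps: list[Step]) -> State:
    state: State = {}
    for step in steps:
        result = step.run(state)
        # Adapt: if the primary result is invalid, try the fallback once.
        if not step.validate(result) and step.fallback is not None:
            result = step.fallback(state)
        if not step.validate(result):
            raise RuntimeError(f"step {step.name!r} produced an invalid result")
        state[step.name] = result
    return state


steps = [
    Step("ledger", lambda s: {"INV-1": 100, "INV-2": 250}, validate=bool),
    # The primary bank export is empty, so this step falls back to a secondary source.
    Step("bank", lambda s: {}, validate=bool,
         fallback=lambda s: {"INV-1": 100, "INV-2": 240}),
    Step("mismatches",
         lambda s: sorted(k for k, v in s["ledger"].items() if s["bank"].get(k) != v),
         validate=lambda r: isinstance(r, list)),
]
result = orchestrate(steps)
print(result["mismatches"])  # ['INV-2']
```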

Observability and Traceability

Frontier allows organizations to inspect what an agent did, which tools were used, what outputs were generated, and where failures occurred.

This is essential for debugging, governance, and cost control. Black-box AI does not scale inside enterprise infrastructure. Traceable AI does.

Frontier's transparency layer is therefore not cosmetic. It is foundational for production deployment.
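
Traceability in its simplest form means wrapping every tool call so that its inputs, output, error, and duration are recorded whether or not it succeeds. The `Tracer` and `Span` classes below are a hypothetical sketch of that idea, not Frontier's tracing interface; production systems typically export such spans to a tracing backend instead of keeping them in memory.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Optional


@dataclass
class Span:
    tool: str
    inputs: dict[str, Any]
    output: Any = None
    error: Optional[str] = None
    duration_ms: float = 0.0


@dataclass
class Tracer:
    spans: list[Span] = field(default_factory=list)

    def call(self, tool: str, fn: Callable[..., Any], **inputs: Any) -> Any:
        span = Span(tool=tool, inputs=inputs)
        start = time.perf_counter()
        try:
            span.output = fn(**inputs)
            return span.output
        except Exception as exc:
            # Capture the failure in the trace, then let the caller handle it.
            span.error = f"{type(exc).__name__}: {exc}"
            raise
        finally:
            # Runs on success and failure alike, so every call leaves a record.
            span.duration_ms = (time.perf_counter() - start) * 1000
            self.spans.append(span)


tracer = Tracer()
tracer.call("search_logs", lambda query: ["ERROR db timeout at 02:14"], query="timeout")
try:
    tracer.call("fetch_dashboard", lambda panel: 1 / 0, panel="latency")
except ZeroDivisionError:
    pass  # the failure is still captured in the trace

for span in tracer.spans:
    print(f"{span.tool} {span.inputs} -> {span.error or 'ok'} ({span.duration_ms:.2f} ms)")
```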

Structured Deployment Lifecycle

Frontier supports controlled iteration. Agents can be versioned, refined, evaluated, and redeployed in structured cycles. This aligns AI agent management with modern DevOps principles.

Instead of rebuilding systems from scratch, teams can incrementally improve them. This lifecycle support reduces risk and enables progressive scaling. It also prevents the fragmentation that typically occurs when AI pilots are built in isolation.
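
Versioned, gated redeployment can be sketched as a small promotion policy: a candidate version goes live only if it clears a minimum evaluation score and does not regress against the current version, and earlier versions stay available for rollback. `AgentVersion`, `AgentLifecycle`, and the threshold values are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class AgentVersion:
    version: str
    instructions: str   # stands in for prompt, tool set, and model configuration
    eval_score: float   # e.g. accuracy from an offline evaluation run


@dataclass
class AgentLifecycle:
    min_score: float = 0.9
    history: list[AgentVersion] = field(default_factory=list)  # promoted versions, oldest first

    @property
    def live(self) -> Optional[AgentVersion]:
        return self.history[-1] if self.history else None

    def promote(self, candidate: AgentVersion) -> bool:
        # Gate 1: absolute quality bar.
        if candidate.eval_score < self.min_score:
            return False
        # Gate 2: no regression against the version currently live.
        if self.live is not None and candidate.eval_score < self.live.eval_score:
            return False
        self.history.append(candidate)
        return True

    def rollback(self) -> Optional[AgentVersion]:
        # Revert to the previous promoted version, keeping at least one live.
        if len(self.history) > 1:
            self.history.pop()
        return self.live


lifecycle = AgentLifecycle(min_score=0.8)
print(lifecycle.promote(AgentVersion("v1", "Route inbound leads", 0.82)))             # True
print(lifecycle.promote(AgentVersion("v2", "Route leads, add scoring", 0.78)))        # False: below bar
print(lifecycle.promote(AgentVersion("v3", "Route leads, refined scoring", 0.91)))    # True
print(lifecycle.live.version)        # v3
print(lifecycle.rollback().version)  # v1
```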

Controlled Autonomy Gradients

Another strong functional capability is adjustable autonomy. Organizations can deploy agents in different operational modes ranging from suggestion-only environments to fully autonomous workflows within defined boundaries.

Enterprises rarely move directly to full autonomy. Frontier allows gradual trust building.
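
Adjustable autonomy can be modeled as a mode that decides what happens to each proposed action: return it as a suggestion, hold it for human approval, or execute it. The sketch assumes three hypothetical modes and an allowlist that bounds what even the autonomous mode may do unattended; the names are illustrative, not Frontier settings.

```python
from enum import Enum
from typing import Callable


class AutonomyMode(Enum):
    SUGGEST_ONLY = "suggest_only"      # agent proposes, a human acts
    HUMAN_APPROVAL = "human_approval"  # agent acts only after explicit approval
    AUTONOMOUS = "autonomous"          # agent acts on its own, within an allowlist


def handle_action(
    mode: AutonomyMode,
    action: str,
    execute: Callable[[], str],
    approved: bool = False,
    autonomous_allowlist: frozenset[str] = frozenset(),
) -> str:
    if mode is AutonomyMode.SUGGEST_ONLY:
        return f"SUGGESTION: {action}"
    if mode is AutonomyMode.HUMAN_APPROVAL:
        return execute() if approved else f"PENDING APPROVAL: {action}"
    # Autonomous mode is still bounded: only allowlisted actions run unattended.
    if action in autonomous_allowlist:
        return execute()
    return f"ESCALATED: {action}"


def issue_refund() -> str:
    return "refund issued"  # stub for a real side effect


allow = frozenset({"send_status_email"})
print(handle_action(AutonomyMode.SUGGEST_ONLY, "issue_refund", issue_refund))
print(handle_action(AutonomyMode.HUMAN_APPROVAL, "issue_refund", issue_refund))
print(handle_action(AutonomyMode.HUMAN_APPROVAL, "issue_refund", issue_refund, approved=True))
print(handle_action(AutonomyMode.AUTONOMOUS, "issue_refund", issue_refund,
                    autonomous_allowlist=allow))
```

Moving an agent up the gradient then becomes a configuration change (a new mode or a larger allowlist) rather than a rewrite.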

Enterprise Integration Architecture

Frontier is designed to integrate into existing stacks rather than replace them.

Agents can connect with CRM systems, internal dashboards, knowledge repositories, ticketing platforms, and custom middleware. The critical factor is structured interoperability. AI becomes part of the system architecture instead of operating as a detached overlay.

This reduces shadow AI usage and supports centralized governance.
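
One common way to get structured interoperability is an adapter layer: each system is wrapped behind the same small interface and registered in one place. The sketch below uses in-memory stand-ins for a CRM and a ticketing platform; `Connector`, `IntegrationHub`, and the record shapes are hypothetical.

```python
from typing import Any, Protocol


class Connector(Protocol):
    """Uniform interface every system adapter implements (hypothetical)."""
    name: str

    def fetch(self, record_id: str) -> dict[str, Any]: ...


class InMemoryCRM:
    name = "crm"

    def __init__(self, records: dict[str, dict[str, Any]]) -> None:
        self._records = records

    def fetch(self, record_id: str) -> dict[str, Any]:
        return self._records[record_id]


class InMemoryTicketing:
    name = "ticketing"

    def __init__(self, tickets: dict[str, dict[str, Any]]) -> None:
        self._tickets = tickets

    def fetch(self, record_id: str) -> dict[str, Any]:
        return self._tickets[record_id]


class IntegrationHub:
    """Single registry of the systems agents may reach."""

    def __init__(self) -> None:
        self._connectors: dict[str, Connector] = {}

    def register(self, connector: Connector) -> None:
        self._connectors[connector.name] = connector

    def fetch(self, system: str, record_id: str) -> dict[str, Any]:
        if system not in self._connectors:
            raise KeyError(f"no connector registered for {system!r}")
        return self._connectors[system].fetch(record_id)

    def systems(self) -> list[str]:
        return sorted(self._connectors)


hub = IntegrationHub()
hub.register(InMemoryCRM({"ACME": {"owner": "dana", "stage": "proposal"}}))
hub.register(InMemoryTicketing({"T-9": {"status": "open", "account": "ACME"}}))

# Cross-system lookup: start from a ticket, then pull the related CRM account.
ticket = hub.fetch("ticketing", "T-9")
account = hub.fetch("crm", ticket["account"])
print(hub.systems(), account["stage"])  # ['crm', 'ticketing'] proposal
```

Because agents can only reach systems registered in the hub, the registry doubles as an inventory of AI access, which is the kind of central visibility that makes governance possible.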

Final Assessment

OpenAI Frontier is not just a branding layer around large language models. Its real strength lies in functional architecture.

- Controlled tool execution

- Permission-scoped access

- Persistent context

- Structured evaluation

- Lifecycle management

These are the capabilities that make enterprise AI deployment viable.

Organizations that understand and leverage these features will move beyond pilots and into sustainable AI operations.