Orq.ai: The Missing Control Layer for Production-Grade LLM Systems

Orq.ai is an LLM operations and governance platform designed for teams running AI in production. This article explains what Orq does, why it matters, and which real-world engineering problems it solves around prompts, observability, cost control, and compliance.

Large Language Models have moved rapidly from experimental demos to business-critical infrastructure. What once started as a single API call is now embedded in customer support systems, internal tooling, analytics pipelines, and decision-making workflows.

With this shift comes a reality many engineering teams are now facing: LLMs are easy to integrate, but extremely difficult to operate reliably at scale.

This is where Orq.ai comes in.

Orq is not another LLM provider, and it is not a thin SDK wrapper. It is an LLM operations and governance platform built to help teams design, deploy, observe, and control LLM-powered systems in production. As organizations mature in their AI usage, Orq is emerging as a critical control layer between experimentation and enterprise-grade AI infrastructure.

This article explains what Orq is, why it is becoming increasingly relevant, and which concrete engineering challenges it helps solve.

What Is Orq.ai

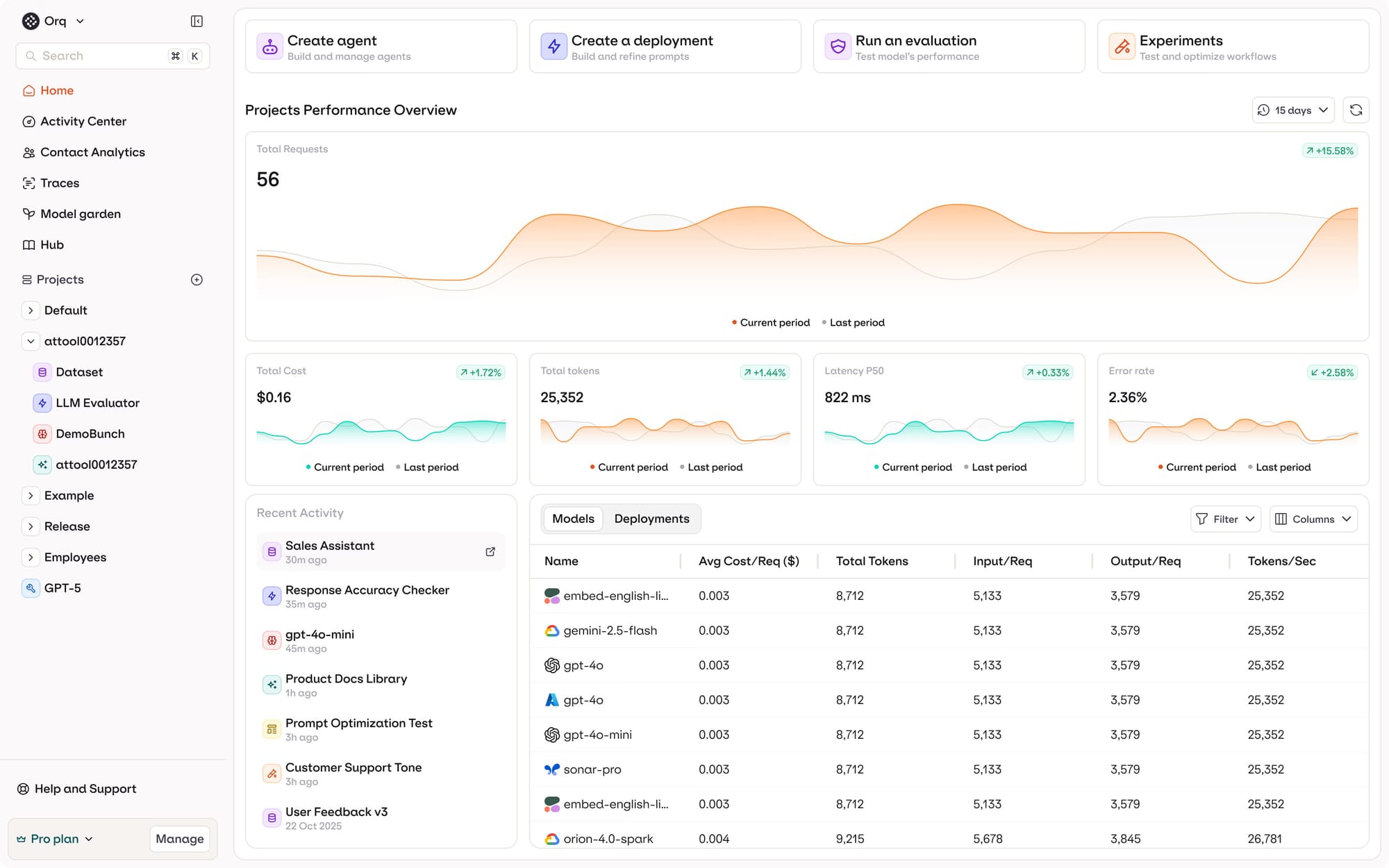

Orq.ai is a platform that sits between your application and one or more foundation models, whether from providers such as OpenAI and Anthropic or open-source LLMs.

Instead of hard-coding prompts and model logic directly into application code, Orq introduces a structured abstraction layer where prompts, models, evaluations, and policies are managed centrally.

This allows teams to treat LLM interactions as managed assets rather than ad hoc API calls. Prompts become versioned, observable, and auditable. Model behavior becomes measurable. Costs become traceable.

In practical terms, Orq turns LLM usage into infrastructure.

Why LLM Systems Break Down in Production

Many LLM projects fail not because the models are weak, but because the operational setup does not scale.

Common patterns include:

- Prompts scattered across services with no ownership

- Silent prompt changes pushed directly to production

- No reliable way to test prompt updates

- Limited visibility into output quality over time

- Rapidly increasing inference costs

- Growing pressure from security and compliance teams

Traditional monitoring tools, which track uptime, error rates, and latency, do not catch this. From a system perspective, everything still works; from a business perspective, quality erodes.

This gap between technical uptime and functional reliability is exactly what Orq is designed to address.

Core Capabilities of Orq

Prompt Management and Version Control

Orq treats prompts as first-class artifacts. Each prompt can be versioned, reviewed, tested, and rolled back.

This removes a major risk factor in LLM systems: uncontrolled prompt drift. Engineers no longer need to guess which prompt version caused a regression or manually track changes across repositories.

For teams working across environments, this alone can prevent costly production incidents.
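To make the idea concrete, here is a minimal Python sketch of prompt versioning with per-environment deployment and rollback. It is not Orq's API: `PromptRegistry`, `publish`, `deploy`, `rollback`, and `render` are hypothetical names, and everything lives in memory. The design choice worth noting is that versions are append-only, so a rollback only repoints an environment at an earlier version instead of editing prompt text in place.

```python
from dataclasses import dataclass, field

# Hypothetical sketch of prompt versioning; not Orq's SDK or data model.


@dataclass
class PromptRegistry:
    # prompt key -> append-only list of template versions (version N is index N-1)
    versions: dict[str, list[str]] = field(default_factory=dict)
    # (prompt key, environment) -> version number currently served there
    deployed: dict[tuple[str, str], int] = field(default_factory=dict)

    def publish(self, key: str, template: str) -> int:
        """Store a new immutable version and return its 1-based number."""
        history = self.versions.setdefault(key, [])
        history.append(template)
        return len(history)

    def deploy(self, key: str, env: str, version: int) -> None:
        """Point an environment at an existing version; no template is edited."""
        if not 1 <= version <= len(self.versions.get(key, [])):
            raise ValueError(f"unknown version {version} for prompt {key!r}")
        self.deployed[(key, env)] = version

    def rollback(self, key: str, env: str) -> int:
        """Serve the previous version in this environment and return its number."""
        current = self.deployed[(key, env)]
        if current == 1:
            raise ValueError("already at the first version")
        self.deploy(key, env, current - 1)
        return current - 1

    def render(self, key: str, env: str, **variables: str) -> str:
        """Fill in the template currently deployed to this environment."""
        template = self.versions[key][self.deployed[(key, env)] - 1]
        return template.format(**variables)


registry = PromptRegistry()
registry.publish("support-reply", "Answer politely: {question}")
v2 = registry.publish("support-reply", "Answer politely and briefly: {question}")
registry.deploy("support-reply", "production", v2)
registry.rollback("support-reply", "production")  # production now serves version 1 again
print(registry.render("support-reply", "production", question="Where is my order?"))
# Answer politely: Where is my order?
```

Because staging and production are just different keys in `deployed`, a new version can be tested in one environment while the other keeps serving the last known-good prompt.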

Experimentation and Evaluation

Orq enables structured experimentation across prompts and models.

Engineers can compare different prompt versions or model providers using real datasets, synthetic inputs, or predefined test cases. Output quality, latency, and cost can be evaluated side by side.

This turns prompt engineering into an evidence-driven process instead of subjective trial and error.
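A side-by-side comparison comes down to running the same test cases through each variant and aggregating quality, latency, and cost. The sketch below assumes a variant is any callable that returns an output plus its measured latency and cost; `variant_a` and `variant_b` are hypothetical stubs standing in for real model calls, and exact-match scoring is only a placeholder for whatever evaluator a team actually uses. None of this is Orq's experiment API.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical evaluation harness; not Orq's experiment API.


@dataclass
class Result:
    output: str
    latency_ms: float
    cost_usd: float


# A variant is any callable from input text to a Result: a prompt version, a model, or both.
Variant = Callable[[str], Result]


def evaluate(variant: Variant, cases: list[tuple[str, str]],
             score: Callable[[str, str], bool]) -> dict[str, float]:
    """Run every (input, expected) case through one variant and aggregate the metrics."""
    runs = [(variant(text), expected) for text, expected in cases]
    return {
        "pass_rate": sum(score(r.output, exp) for r, exp in runs) / len(runs),
        "avg_latency_ms": sum(r.latency_ms for r, _ in runs) / len(runs),
        "total_cost_usd": sum(r.cost_usd for r, _ in runs),
    }


# Stubs standing in for real model calls with two different prompt versions.
def variant_a(text: str) -> Result:
    return Result(output=text.upper(), latency_ms=120.0, cost_usd=0.002)


def variant_b(text: str) -> Result:
    return Result(output=text, latency_ms=80.0, cost_usd=0.001)


def exact_match(output: str, expected: str) -> bool:
    return output == expected


cases = [("hi", "HI"), ("ok", "OK")]
for name, variant in [("A", variant_a), ("B", variant_b)]:
    print(name, evaluate(variant, cases, exact_match))
```

In this toy run, variant A wins on quality while B is faster and cheaper; making that trade-off explicit, rather than judging outputs by eye, is the point of structured evaluation.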

Observability and Monitoring

Once deployed, Orq provides deep visibility into how LLM systems behave in production.

Teams can monitor:

- Prompt usage and frequency

- Model performance trends

- Latency and failure patterns

- Output characteristics

- Token usage and cost drivers

This is critical for detecting subtle regressions early. LLM systems rarely fail loudly. They degrade quietly. Orq makes those changes visible.
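The raw material for this kind of monitoring is a per-call record. As an illustrative sketch (the `CallRecord` fields and the `summarize` helper are assumptions, not Orq's data model), grouping records by prompt already surfaces volume, failure rate, worst-case latency, and token usage:

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical per-call record; field names are illustrative, not Orq's schema.


@dataclass
class CallRecord:
    prompt_key: str
    model: str
    latency_ms: float
    input_tokens: int
    output_tokens: int
    ok: bool


def summarize(records: list[CallRecord]) -> dict[str, dict[str, float]]:
    """Group calls by prompt and report volume, failure rate, worst latency, and tokens."""
    groups: dict[str, list[CallRecord]] = defaultdict(list)
    for record in records:
        groups[record.prompt_key].append(record)
    return {
        key: {
            "calls": len(calls),
            "failure_rate": sum(not c.ok for c in calls) / len(calls),
            "max_latency_ms": max(c.latency_ms for c in calls),
            "total_tokens": sum(c.input_tokens + c.output_tokens for c in calls),
        }
        for key, calls in groups.items()
    }


records = [
    CallRecord("faq", "model-a", 100.0, 50, 20, True),
    CallRecord("faq", "model-a", 300.0, 60, 30, False),
    CallRecord("summary", "model-b", 200.0, 500, 100, True),
]
for key, stats in summarize(records).items():
    print(key, stats)
```

Tracking these numbers per prompt over time, rather than only per service, is what lets a quiet degradation in one prompt stand out.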

Governance, Security, and Compliance

As LLMs become part of core business workflows, governance becomes unavoidable.

Orq introduces centralized controls such as:

- Access management for prompts and models

- Audit logs for changes and usage

- Policy enforcement across environments

- Data handling and compliance safeguards

This allows organizations to scale LLM usage without losing oversight or exposing themselves to regulatory risk.
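Access management and audit logging reinforce each other: every authorization decision, including denials, should leave a trace. A minimal sketch, assuming a hypothetical role-per-environment policy table; the roles, actions, and `authorize` function are illustrative and do not describe Orq's permission model:

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical policy table: (action, environment) -> roles allowed to perform it.
POLICY: dict[tuple[str, str], set[str]] = {
    ("edit_prompt", "staging"): {"engineer", "prompt_owner"},
    ("edit_prompt", "production"): {"prompt_owner"},
    ("view_logs", "production"): {"engineer", "prompt_owner", "compliance"},
}


@dataclass
class AuditLog:
    entries: list[dict] = field(default_factory=list)

    def record(self, user: str, role: str, action: str, env: str, allowed: bool) -> None:
        # Entries are only ever appended; this class offers no way to edit or delete them.
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "env": env,
            "allowed": allowed,
        })


def authorize(log: AuditLog, user: str, role: str, action: str, env: str) -> bool:
    """Check the policy and log the decision, whether allowed or denied."""
    allowed = role in POLICY.get((action, env), set())
    log.record(user, role, action, env, allowed)
    return allowed


log = AuditLog()
print(authorize(log, "ana", "engineer", "edit_prompt", "staging"))     # True
print(authorize(log, "ana", "engineer", "edit_prompt", "production"))  # False
print(len(log.entries))                                                # 2
```

Unknown action and environment pairs fall through to an empty role set, so the sketch denies by default.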

Why Engineers Find Orq Compelling

From an engineering standpoint, Orq solves problems that teams are otherwise forced to patch together manually.

It reduces cognitive overhead by consolidating prompt storage, experimentation, monitoring, and governance in a single platform. Engineers can focus on building product logic instead of maintaining internal tooling.

It also supports model-agnostic architectures. Teams are not locked into one provider: switching models, routing requests, or running comparisons becomes feasible without large refactors.
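Provider independence usually comes from coding against a small interface rather than a vendor SDK. The sketch below assumes a hypothetical `ChatProvider` protocol with a single `complete` method; `StubProvider` stands in for real OpenAI or Anthropic adapters, and `route` shows simple priority-order fallback. A platform like Orq handles this behind its own API, which this code does not attempt to reproduce.

```python
from typing import Protocol

# Hypothetical provider interface; real adapters would wrap each vendor's own SDK.


class ChatProvider(Protocol):
    name: str

    def complete(self, prompt: str) -> str: ...


class StubProvider:
    """Stand-in for a real adapter; can be marked unhealthy to simulate an outage."""

    def __init__(self, name: str, healthy: bool = True) -> None:
        self.name = name
        self.healthy = healthy

    def complete(self, prompt: str) -> str:
        if not self.healthy:
            raise ConnectionError(f"{self.name} unavailable")
        return f"[{self.name}] {prompt}"


def route(prompt: str, providers: list[ChatProvider]) -> str:
    """Try providers in priority order, falling back to the next one on connection errors."""
    errors: list[str] = []
    for provider in providers:
        try:
            return provider.complete(prompt)
        except ConnectionError as exc:
            errors.append(str(exc))
    raise RuntimeError("all providers failed: " + "; ".join(errors))


primary = StubProvider("provider-a", healthy=False)
secondary = StubProvider("provider-b")
print(route("Summarize this ticket.", [primary, secondary]))  # [provider-b] Summarize this ticket.
```

Since application code depends only on `complete`, swapping or reordering providers changes a list, not the call sites.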

Most importantly, Orq acknowledges that LLM systems are not just technical systems. They are sociotechnical systems involving engineering, product, legal, and operations, and Orq gives these stakeholders a shared source of truth.

Engineering Pain Points Orq Solves

Prompt Drift and Regression

Model updates, small prompt edits, or changes in input distribution can all affect output quality. Without structured tooling, these regressions are almost impossible to trace.

Orq introduces versioning, testing, and observability that make drift measurable and manageable.
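One way to make drift measurable is to keep scoring a sample of production outputs and compare a rolling average against the score a prompt version achieved when it was approved. A minimal sketch, assuming scores in [0, 1] from some evaluator; `DriftMonitor`, the window size, and the tolerance are illustrative choices, not Orq features:

```python
from collections import deque

# Hypothetical drift check; scores are assumed to be in [0, 1] from some evaluator.


class DriftMonitor:
    """Compare the mean of recent quality scores against a fixed baseline mean."""

    def __init__(self, baseline_mean: float, window: int = 50, tolerance: float = 0.05) -> None:
        self.baseline_mean = baseline_mean
        self.tolerance = tolerance
        self.recent: deque[float] = deque(maxlen=window)

    def observe(self, score: float) -> bool:
        """Record one score; return True when the full window has dropped past tolerance."""
        self.recent.append(score)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough data for a stable estimate yet
        current = sum(self.recent) / len(self.recent)
        return self.baseline_mean - current > self.tolerance


monitor = DriftMonitor(baseline_mean=0.90, window=4)
for score in [0.92, 0.88, 0.80, 0.75, 0.70]:
    print(score, monitor.observe(score))
```

The monitor does not care whether the cause was a model update, a prompt edit, or a shift in inputs; it only signals that quality moved, and the version history tells you what changed around that time.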

Debugging Non-Deterministic Behavior

When an LLM produces an incorrect or inconsistent output, the root cause is rarely obvious.

Is it the prompt, the model, the temperature setting, or the context length?

Orq provides the contextual data engineers need to debug these issues systematically rather than relying on guesswork.
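Systematic debugging starts with recording every factor that might explain an output. As a sketch (the `Trace` fields are assumptions chosen to mirror the questions above, not Orq's trace schema), diffing a good and a bad trace narrows the search to what actually changed:

```python
from dataclasses import asdict, dataclass

# Hypothetical trace record; the fields mirror the questions above, not Orq's schema.


@dataclass(frozen=True)
class Trace:
    prompt_version: int
    model: str
    temperature: float
    context_tokens: int
    output: str


def differing_factors(good: Trace, bad: Trace) -> dict[str, tuple]:
    """Return each input that differs between a good and a bad response (output excluded)."""
    g, b = asdict(good), asdict(bad)
    return {k: (g[k], b[k]) for k in g if k != "output" and g[k] != b[k]}


good = Trace(prompt_version=3, model="model-a", temperature=0.2,
             context_tokens=1800, output="Correct refund policy")
bad = Trace(prompt_version=3, model="model-a", temperature=0.9,
            context_tokens=7900, output="Invented refund policy")
print(differing_factors(good, bad))
# {'temperature': (0.2, 0.9), 'context_tokens': (1800, 7900)}
```

In this made-up case, the same prompt and model misbehaved at a higher temperature with a much longer context, which points the investigation at those two settings rather than at the prompt text.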

Cost Transparency and Optimization

LLM costs are driven by token usage, prompt length, retries, and model choice. These costs often increase before teams realize where the problem lies.

Orq surfaces cost drivers at a granular level, enabling targeted optimization instead of blunt cost cutting.
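Granular cost attribution is arithmetic over token counts: for each call, input and output tokens times the model's per-token price, summed per prompt. The prices below are placeholders, not real vendor rates, and the model names are hypothetical; retries simply appear as extra calls and therefore show up in the totals.

```python
from collections import Counter

# Placeholder prices in USD per 1M tokens as (input, output). Not real vendor pricing.
PRICES: dict[str, tuple[float, float]] = {
    "large-model": (5.00, 15.00),
    "small-model": (0.50, 1.50),
}


def call_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost of one call: tokens in each direction times that direction's price."""
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


def top_cost_drivers(calls: list[tuple[str, str, int, int]], n: int = 3) -> list[tuple[str, float]]:
    """Sum cost per prompt key and return the n most expensive prompts.

    Each call is (prompt_key, model, input_tokens, output_tokens); a retry is just another call.
    """
    totals: Counter[str] = Counter()
    for prompt_key, model, input_tokens, output_tokens in calls:
        totals[prompt_key] += call_cost(model, input_tokens, output_tokens)
    return totals.most_common(n)


calls = [
    ("summarize-ticket", "large-model", 12_000, 800),
    ("summarize-ticket", "large-model", 12_000, 800),  # retry after a timeout
    ("classify-intent", "small-model", 300, 5),
]
for prompt_key, cost in top_cost_drivers(calls):
    print(f"{prompt_key}: ${cost:.4f}")
```

Even with placeholder numbers the pattern is visible: one retry doubles the cost of the summarization prompt, and its long input, not its output, dominates the bill. That is the kind of targeted finding that blunt cost cutting misses.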

Cross-Team Alignment

Without a shared platform, LLM changes often happen in silos: engineering optimizes for performance, product focuses on output quality, and compliance worries about risk.

Orq creates a shared operational layer that aligns these perspectives and speeds up decision making.

Where Orq Is Not a Silver Bullet

Orq does not magically fix hallucinations. It does not replace domain expertise or product thinking. It does not write good prompts for you.

Teams still need clear use cases, evaluation criteria, and human oversight.

What Orq does provide is the infrastructure that makes responsible, scalable LLM usage possible.

Why Orq Signals a Market Shift

The rise of Orq reflects a broader transition in the AI landscape.

The question is no longer whether LLMs can be built. The question is whether they can be operated reliably, securely, and economically at scale.

As LLMs move deeper into core business processes, platforms that focus on control, observability, and governance will define the next phase of AI adoption.

Orq is not interesting because it is flashy. It is interesting because it solves the unglamorous problems that determine whether AI systems succeed or fail in the real world.