Cut Your API Costs in Half with TOON: How We Reduced Token Usage by 50%

We reduced token usage and API costs by over 50% by replacing JSON with TOON, a compact format built for LLM efficiency. Learn how TOON simplifies structure, speeds up processing, and halves your OpenAI API expenses.

At Scalevise, we handle thousands of API requests daily across ChatGPT, Claude, and other large language model platforms. Every token counts because every token costs money.

When we noticed that a large portion of our API spend wasn’t tied to actual content but to data structure overhead, we began looking deeper. JSON, the web’s default data format, turned out to be a silent cost amplifier. Brackets, commas, and quotes may seem trivial, but at scale, they add up quickly.

That’s when we decided to build our own optimized structure: TOON.

What Is TOON?

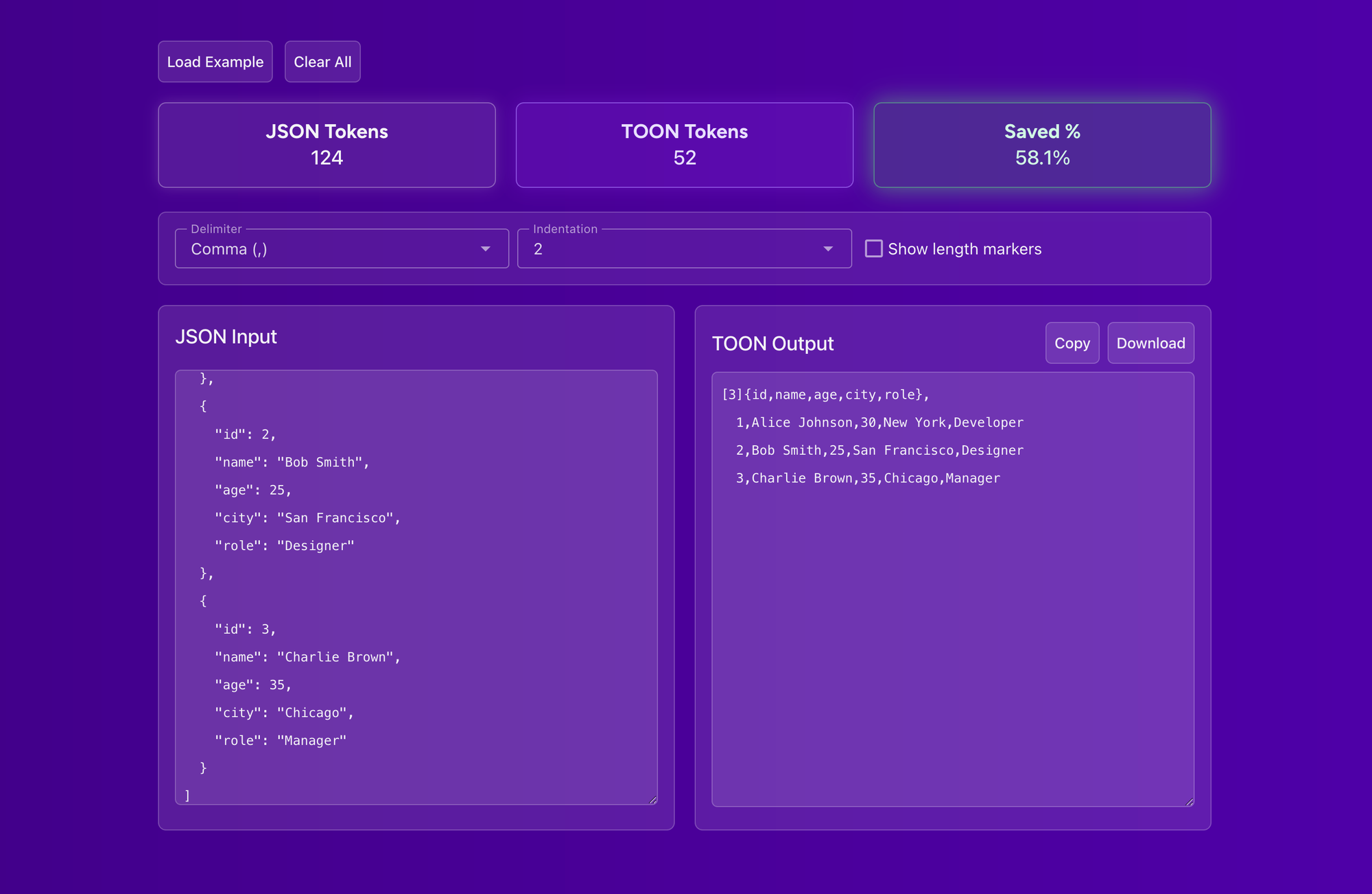

TOON is a lean, structured, human-readable format derived from JSON, designed specifically for use with language models. It maintains the same logical structure as JSON but eliminates unnecessary syntax. No redundant punctuation, no wasted space, just model-friendly data.

Instead of using characters that add nothing to meaning, TOON focuses on semantic clarity. The result is smaller payloads, faster processing, and lower token counts.

Think of it as JSON re-imagined for AI efficiency.
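To make the idea concrete, here is a minimal Python sketch of how a list of uniform records might be flattened into a TOON-style table. The layout used here (a `key[count]{fields}:` header followed by indented, comma-separated rows) is an assumption for illustration, and `to_toon_table` is a hypothetical helper, not the converter referred to in this article. It only handles flat records and does no quoting.

```python
import json


def to_toon_table(key, rows):
    """Encode a list of flat dicts with identical keys as a TOON-style table.

    Hypothetical helper: values containing commas or newlines would need
    quoting, which this sketch does not handle.
    """
    if not rows:
        return key + "[0]:"
    fields = list(rows[0].keys())
    for row in rows:
        if list(row.keys()) != fields:
            raise ValueError("all rows must share the same keys in the same order")
    # The header declares the row count and field names once,
    # instead of repeating every key inside every record.
    header = key + "[" + str(len(rows)) + "]{" + ",".join(fields) + "}:"
    lines = [header]
    for row in rows:
        lines.append("  " + ",".join(str(row[name]) for name in fields))
    return "\n".join(lines)


users = [
    {"id": 1, "name": "Alice", "role": "admin"},
    {"id": 2, "name": "Bob", "role": "user"},
]

as_json = json.dumps({"users": users})
as_toon = to_toon_table("users", users)

print(as_json)
print(as_toon)
print(len(as_json), "characters as JSON,", len(as_toon), "as TOON-style text")
```

Most of the saving in this sketch comes from stating the field names once in the header rather than in every record, so the gain grows with the number of rows.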

How We Cut Token Usage by 50%

We benchmarked TOON against JSON across three major APIs: ChatGPT, Claude, and Gemini.

Each test used the exact same dataset, content length, and context window. The only change was the input format.

The results were dramatic:

| API | JSON Input | TOON Input | Reduction |
|---|---|---|---|
| ChatGPT | ~1,000 tokens | ~470 tokens | -53% |
| Claude | ~1,200 tokens | ~600 tokens | -50% |
| Gemini | ~900 tokens | ~450 tokens | -50% |

In short: about half the input tokens, and about half the input cost.
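The reduction column follows directly from the two token counts. As a quick check, this short Python sketch recomputes it from the approximate figures in the benchmark table; `reduction_pct` is a name introduced here for illustration.

```python
# Approximate token counts taken from the benchmark table above:
# (JSON input tokens, TOON input tokens).
benchmarks = {
    "ChatGPT": (1000, 470),
    "Claude": (1200, 600),
    "Gemini": (900, 450),
}


def reduction_pct(json_tokens, toon_tokens):
    """Percentage of input tokens saved by switching formats."""
    return 100 * (json_tokens - toon_tokens) / json_tokens


reductions = {
    api: reduction_pct(json_tokens, toon_tokens)
    for api, (json_tokens, toon_tokens) in benchmarks.items()
}

for api, pct in reductions.items():
    print(f"{api}: {pct:.0f}% fewer input tokens")
```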

The ripple effects went beyond price.

Latency dropped by roughly 15%, and debugging became much easier because TOON was clean and readable by humans and machines alike.

Why It Works

Language models like ChatGPT and Claude tokenize everything they receive, so the brackets, commas, and quotes in JSON all add to the bill.

TOON removes this noise while keeping structure intact, allowing LLMs to focus purely on meaning rather than markup.

By optimizing how data is represented, TOON turns verbose machine syntax into efficient model language.

It’s not compression; it’s intelligent simplification.
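One rough way to see how much of a JSON payload is markup is to count its structural characters. The Python sketch below does that. It is a character-level proxy only: real token counts depend on each provider's tokenizer, and `structural_share` is a hypothetical helper introduced for this example.

```python
import json

# Characters that carry JSON structure rather than data.
STRUCTURAL = set('{}[]",:')


def structural_share(text):
    """Fraction of characters in `text` that are JSON punctuation."""
    if not text:
        return 0.0
    structural = sum(1 for ch in text if ch in STRUCTURAL)
    return structural / len(text)


records = [
    {"id": 1, "name": "Alice"},
    {"id": 2, "name": "Bob"},
]

# Compact separators remove optional whitespace, so what remains is
# the punctuation JSON actually requires.
compact_json = json.dumps(records, separators=(",", ":"))
share = structural_share(compact_json)

print(compact_json)
print(f"{share:.0%} of the characters are structural punctuation")
```

A count like this also treats punctuation inside string values as structural, so it is best read as an upper-bound estimate on small, data-light payloads.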

You can try it right now with our free converter:

👉 Convert JSON to TOON

How We Integrated TOON at Scalevise

We rolled out TOON across internal Make.com and n8n workflows, as well as our Node.js services that interact with ChatGPT and Claude APIs. Every structured payload was converted automatically before requests were sent.
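A conversion step of this kind can sit just in front of the request builder. The following Python sketch shows one possible shape, under stated assumptions: `encode_payload` and `build_messages` are hypothetical names, the table layout is assumed rather than taken from the production converter, and the role/content message list mirrors the common chat-API request shape. No network call is made.

```python
import json
from typing import Any, Dict, List


def encode_payload(payload: Any) -> str:
    """Use a TOON-style table for uniform lists of flat dicts, else compact JSON."""
    is_table = (
        isinstance(payload, list)
        and len(payload) > 0
        and all(isinstance(row, dict) for row in payload)
        and all(list(row) == list(payload[0]) for row in payload)
        and all(
            not isinstance(value, (dict, list))
            for row in payload
            for value in row.values()
        )
    )
    if not is_table:
        # Fallback keeps irregular or nested data lossless.
        return json.dumps(payload, separators=(",", ":"))
    fields = list(payload[0])
    header = "rows[" + str(len(payload)) + "]{" + ",".join(fields) + "}:"
    body = ["  " + ",".join(str(row[name]) for name in fields) for row in payload]
    return "\n".join([header] + body)


def build_messages(instruction: str, payload: Any) -> List[Dict[str, str]]:
    """Assemble chat messages with the payload encoded before sending."""
    return [
        {"role": "system", "content": instruction},
        {"role": "user", "content": encode_payload(payload)},
    ]


orders = [{"sku": "A1", "qty": 3}, {"sku": "B2", "qty": 5}]
messages = build_messages("Summarize these orders.", orders)
print(messages[1]["content"])
```

The fallback to compact JSON is a design choice in this sketch: automatic conversion is only safe when the data fits the table shape, so anything irregular passes through unchanged.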

Within a week, our analytics dashboards confirmed what we hoped:

API usage costs dropped by 52%, with no reduction in quality, accuracy, or stability.

Developers on our team also found TOON easier to read and modify, which improved collaboration and debugging during automation design.

The Business Impact

For large-scale operations using AI agents or workflow automations, every token reduction compounds over time. A 50% reduction in input token count means you can roughly double throughput at the same input budget, or cut input costs in half without touching model logic.
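The throughput claim is simple arithmetic, sketched here in Python. The budget and the per-token price are hypothetical placeholders rather than real pricing, and the calculation counts input tokens only, so output tokens would need to be added for a full cost picture.

```python
def requests_within_budget(budget_usd, tokens_per_request, usd_per_million_tokens):
    """Whole requests affordable for a budget, counting input tokens only."""
    # Integer arithmetic avoids floating-point rounding in the division.
    return (budget_usd * 1_000_000) // (tokens_per_request * usd_per_million_tokens)


BUDGET_USD = 100        # hypothetical budget
PRICE_PER_MILLION = 2   # hypothetical input price, USD per million tokens

json_requests = requests_within_budget(BUDGET_USD, 1000, PRICE_PER_MILLION)
toon_requests = requests_within_budget(BUDGET_USD, 500, PRICE_PER_MILLION)

print("JSON-sized requests per budget:", json_requests)
print("TOON-sized requests per budget:", toon_requests)
```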

This shift turned into one of our most effective cost-savings initiatives this year.

It’s proof that efficiency in structure can be as impactful as model selection or hardware scaling.

Future of Data Formats for AI

JSON was built for web APIs, not for large language models. As LLMs become integral to automation, content creation, and enterprise systems, data formats must evolve to serve both humans and models.

TOON is our first step toward that evolution. It aligns structure with meaning, letting AI interpret data more efficiently and companies save substantially on operational costs.

Let’s Optimize Your Data Format

If you manage high-volume AI or automation workflows and want to cut your token usage without rewriting your entire setup, we can help. Scalevise specializes in optimizing AI architectures, reducing model costs, and improving data efficiency through smarter formats like TOON.

Schedule a consultation and let’s cut your AI costs together.