TOON: The Smart, Lightweight JSON Alternative Powering AI and LLM Systems

Discover TOON: a faster, lighter, token-optimized alternative to JSON that improves efficiency in AI and LLM systems.

A New Language for AI Efficiency

Every major AI model, from GPT to Claude, relies on structured data to exchange information with the applications around it.

Until now, JSON (JavaScript Object Notation) has been the default format for that data. It's simple, flexible, and readable.

But as language models are built into more products and pipelines, JSON's size and redundancy are becoming a bottleneck: every repeated key name, quote, and brace costs tokens. The more tokens a model has to process, the higher the cost and latency.

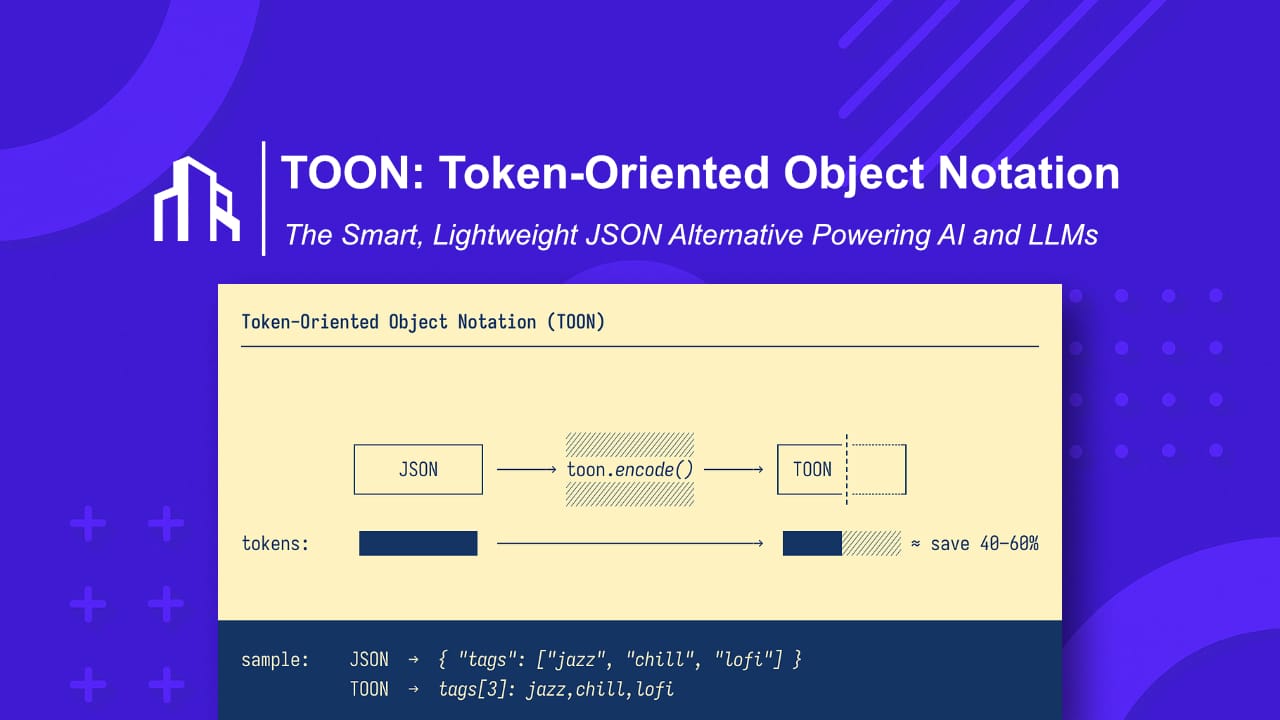

Enter TOON: Token-Oriented Object Notation, a format designed to make AI communication faster, lighter, and more efficient.


Example 1: Standard JSON vs TOON Structure

// JSON
{
  "customer": {
    "name": "Alice",
    "order_id": 5829,
    "items": ["notebook", "pen", "charger"],
    "total_price": 79.50
  }
}

# TOON
@c{name:"Alice",oid:5829,it:["notebook","pen","charger"],tp:79.5}

Example 2: Nested Object Compression

// JSON
{
  "conversation": {
    "sender": "user",
    "message": "How can I automate my workflow?",
    "timestamp": 1731242213
  }
}

# TOON
@cv{s:"user",m:"How can I automate my workflow?",t:1731242213}

What Makes TOON Different

TOON keeps the same familiar structure as JSON (objects, arrays, and key-value pairs), but it is optimized for token usage, not human readability.

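Instead of repeating long key names and extra punctuation, TOON compresses data into a token-friendly shorthand that models can parse with fewer tokens: keys lose their quotes, whitespace disappears, and long names are abbreviated ("order_id" becomes "oid" in Example 1). Abbreviations only work if sender and receiver agree on what each one stands for.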
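To make these rules concrete, here is a minimal Python sketch that reproduces the shorthand from the two examples above. It illustrates this article's notation only and is not a reference TOON implementation: the KEY_MAP abbreviation table, the encode and encode_value helpers, and the rule that a single wrapper key becomes the "@" prefix are all assumptions inferred from the examples. Keys missing from the table (such as "name") pass through unchanged, which matches Example 1.

```python
import json

# Hypothetical abbreviation table, inferred from Examples 1 and 2.
# Sender and receiver would have to share it; keys not listed pass through unchanged.
KEY_MAP = {
    "customer": "c",
    "order_id": "oid",
    "items": "it",
    "total_price": "tp",
    "conversation": "cv",
    "sender": "s",
    "message": "m",
    "timestamp": "t",
}


def encode_value(value):
    """Encode one value: unquoted (possibly abbreviated) keys, no whitespace."""
    if isinstance(value, dict):
        fields = [f"{KEY_MAP.get(key, key)}:{encode_value(item)}" for key, item in value.items()]
        return "{" + ",".join(fields) + "}"
    if isinstance(value, list):
        return "[" + ",".join(encode_value(item) for item in value) + "]"
    # Strings, numbers, booleans and None reuse JSON's scalar syntax (and its escaping).
    return json.dumps(value)


def encode(document):
    """Encode a single-key wrapper object as @<short name>{...}."""
    if len(document) != 1:
        raise ValueError("expected exactly one top-level key")
    name, body = next(iter(document.items()))
    return "@" + KEY_MAP.get(name, name) + encode_value(body)


order = {
    "customer": {
        "name": "Alice",
        "order_id": 5829,
        "items": ["notebook", "pen", "charger"],
        "total_price": 79.50,
    }
}
print(encode(order))  # @c{name:"Alice",oid:5829,it:["notebook","pen","charger"],tp:79.5}
```

Delegating scalars to json.dumps keeps string escaping correct without writing a custom quoting routine; only the structural characters and key names change.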

In simple terms:

- JSON is made for humans and machines.

- TOON is made purely for machines, especially AI models.

The result is a smaller data footprint, lower API costs, and faster model responses.
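A quick way to sanity-check the "smaller footprint" claim is to compare payload sizes. The sketch below (Python, standard library only) measures Example 1 as indented JSON, as minified JSON, and as the shorthand string. Character counts are only a rough proxy: actual token counts depend on each model's tokenizer, so treat the output as indicative rather than a benchmark.

```python
import json

# Example 1 as a Python structure.
order = {
    "customer": {
        "name": "Alice",
        "order_id": 5829,
        "items": ["notebook", "pen", "charger"],
        "total_price": 79.50,
    }
}

pretty = json.dumps(order, indent=2)                 # human-friendly layout
minified = json.dumps(order, separators=(",", ":"))  # same JSON, no whitespace
# The shorthand string from Example 1, copied verbatim.
shorthand = '@c{name:"Alice",oid:5829,it:["notebook","pen","charger"],tp:79.5}'

for label, text in [("pretty JSON", pretty), ("minified JSON", minified), ("shorthand", shorthand)]:
    print(f"{label:>13}: {len(text):3d} characters")
```

Minified JSON is the fairer baseline, since stripping whitespace alone removes part of the overhead; the remaining difference comes from unquoted and abbreviated keys.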

Why It Matters Now

With AI calls now running at enormous volume, efficiency is no longer a technical detail; it's an economic factor. Companies that interact with LLMs at scale pay for every token processed.

A lighter format like TOON can reduce:

- Response latency: fewer tokens to process mean faster responses

- Operational cost: fewer tokens mean lower API expenses

- Energy consumption: less data to transmit, store, and process
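The cost argument comes down to simple arithmetic: tokens per call × calls × price per token. The sketch below estimates a monthly bill for input tokens only. Every number in it (call volume, tokens per payload, price per million tokens) is a hypothetical placeholder, not a measured result, so substitute figures from your own traffic and your provider's price sheet. Latency and energy are not modeled.

```python
def monthly_token_cost(calls_per_day, tokens_per_call, price_per_million_tokens, days=30):
    """Estimate monthly spend on input tokens; all arguments are caller-supplied assumptions."""
    total_tokens = calls_per_day * tokens_per_call * days
    return total_tokens / 1_000_000 * price_per_million_tokens


# Hypothetical figures for illustration only: same traffic, smaller payloads.
baseline = monthly_token_cost(calls_per_day=100_000, tokens_per_call=400, price_per_million_tokens=2.50)
compact = monthly_token_cost(calls_per_day=100_000, tokens_per_call=260, price_per_million_tokens=2.50)

print(f"JSON payloads:    ${baseline:,.2f}")
print(f"Compact payloads: ${compact:,.2f}")
print(f"Saving:           ${baseline - compact:,.2f} ({1 - compact / baseline:.0%})")
```

Because the estimate is linear in every input, the relative saving equals the relative reduction in tokens per call, whatever volume and price you plug in.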

For AI infrastructure teams, it’s the equivalent of switching from diesel to electric: same purpose, different efficiency.

How It Changes AI Workflows

TOON is especially promising for AI systems that exchange structured data frequently: think agents, workflows, and integrations.

For example:

- A sales AI agent that exchanges JSON payloads with CRMs could switch to TOON to reduce its token usage.

- An automation workflow (built in Make.com or n8n) could use TOON to pass compact data between AI services.

- A custom LLM API could use TOON internally to store or transmit results faster without losing structure.
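For compact data to move between services without losing structure, the receiving side has to expand it again. Below is a minimal Python decoder for the shorthand used in this article's examples, under stated assumptions: the KEY_MAP table is hypothetical and must match whatever the sender used, input is well-formed, keys are plain identifiers written directly before their colon, and the top level is a single "@name{...}" object. It quotes the bare keys, hands the result to json.loads, and then restores the full key names.

```python
import json

# Hypothetical shared abbreviation table; the receiver needs the reverse mapping.
KEY_MAP = {"customer": "c", "order_id": "oid", "items": "it", "total_price": "tp",
           "conversation": "cv", "sender": "s", "message": "m", "timestamp": "t"}
REVERSE_MAP = {short: full for full, short in KEY_MAP.items()}


def quote_keys(body):
    """Turn bare keys into quoted JSON keys, copying string literals untouched."""
    out, i = [], 0
    while i < len(body):
        ch = body[i]
        if ch == '"':
            # Copy a whole string literal, skipping over backslash escapes.
            j = i + 1
            while body[j] != '"':
                j += 2 if body[j] == "\\" else 1
            out.append(body[i:j + 1])
            i = j + 1
        elif ch.isalpha() or ch == "_":
            # An identifier followed by ':' is a key; true/false/null are left as-is.
            j = i
            while j < len(body) and (body[j].isalnum() or body[j] == "_"):
                j += 1
            word = body[i:j]
            out.append(json.dumps(word) if j < len(body) and body[j] == ":" else word)
            i = j
        else:
            out.append(ch)
            i += 1
    return "".join(out)


def expand_keys(value):
    """Recursively replace abbreviated keys with their full names."""
    if isinstance(value, dict):
        return {REVERSE_MAP.get(k, k): expand_keys(v) for k, v in value.items()}
    if isinstance(value, list):
        return [expand_keys(v) for v in value]
    return value


def decode(text):
    """Parse @<short name>{...} back into a JSON-compatible Python structure."""
    if not text.startswith("@"):
        raise ValueError("expected a leading '@'")
    brace = text.index("{")
    name = text[1:brace]
    body = json.loads(quote_keys(text[brace:]))
    return {REVERSE_MAP.get(name, name): expand_keys(body)}


restored = decode('@c{name:"Alice",oid:5829,it:["notebook","pen","charger"],tp:79.5}')
print(json.dumps(restored, indent=2))
```

Scanning string literals explicitly, rather than running a regular expression over the whole payload, keeps text such as "a, b:c" inside a message from being mistaken for a key.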

The format fits the broader trend toward token-aware engineering: building AI pipelines that are not only accurate but also efficient.

When TOON Isn’t Ideal

TOON is not meant to replace JSON everywhere.

Its efficiency comes at a cost: it’s less human-readable.

For debugging, logging, or open-source collaboration, JSON remains the better choice.

TOON shines where performance and cost efficiency matter more than manual inspection: in production, not development.

The Bigger Picture: A Leaner AI Ecosystem

The rise of TOON signals a broader shift in how we think about data for AI.

We’re moving from human-first formats to model-first formats.

As AI becomes part of every digital workflow, even small efficiency gains compound at scale. TOON represents that next step: a data language optimized for machines, not humans.

It’s a small change in syntax, but a big leap in performance.

Why Scalevise Is Watching This Trend Closely

At Scalevise, we specialize in automation and AI architecture for fast-growing teams. Formats like TOON could soon redefine how data moves between your AI systems, APIs, and automations.

If you’re building LLM-driven workflows, every millisecond and token matters.

That’s why we’re already exploring how TOON can integrate into AI agents, workflow automation, and data pipelines to reduce overhead and improve scalability.

Want to make your AI infrastructure leaner?