Google NotebookLM Adds Video AI Features: Why This Changes Everything

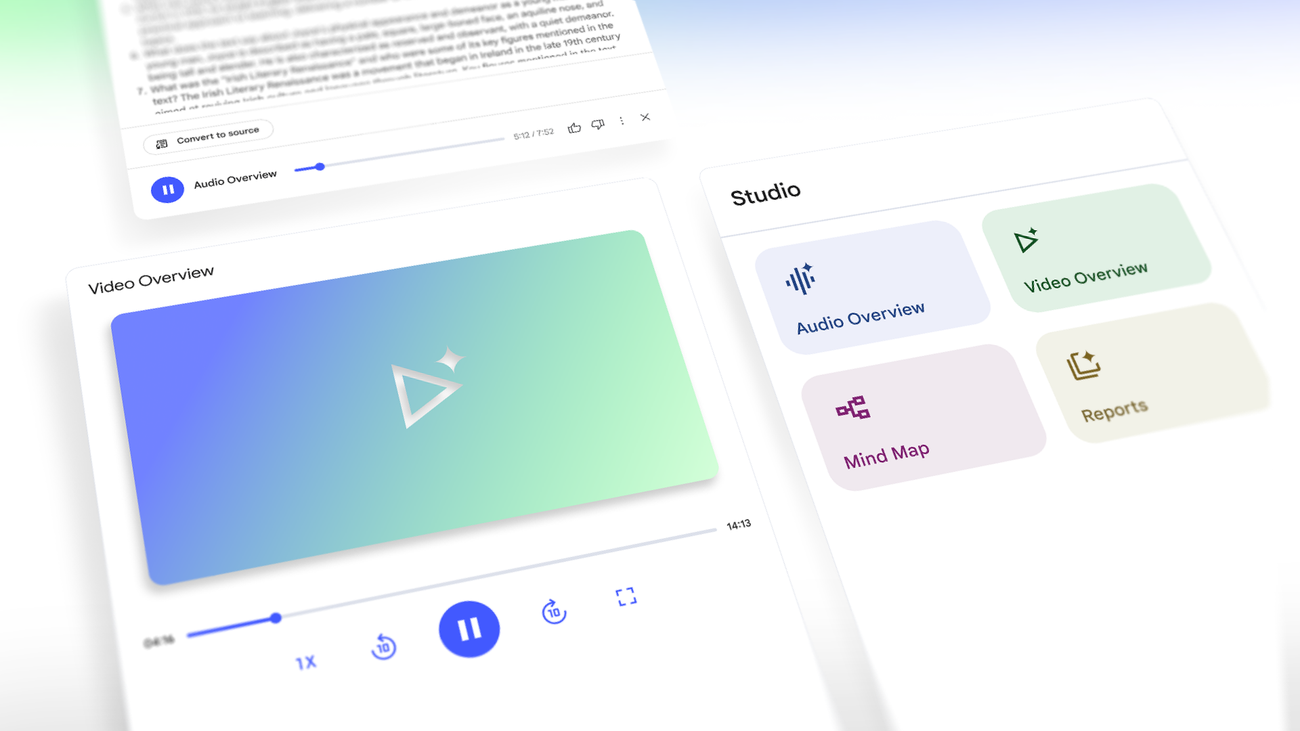

Google’s NotebookLM, already a powerful tool for summarizing and understanding documents, just took a major leap forward. With its latest update, NotebookLM now supports video-based knowledge extraction. Instead of uploading only text-based content, users can now feed the system videos, and the AI will extract key insights, generate summaries, and let users ask questions based on that content.

This update moves NotebookLM closer to becoming a true universal research assistant: one that doesn't just read documents but understands multimedia.

What Does the New Video Feature Do?

With this update, you can now:

- Upload or reference video content (via transcripts or possibly even URLs)

- Let NotebookLM generate summaries from those videos

- Ask questions about specific parts of the video

- Combine video content with documents or PDFs for holistic analysis

This opens up powerful applications for researchers, educators, legal professionals, and content marketers who regularly deal with long-form video and need insights fast.

Imagine dropping a 2-hour keynote or webinar into NotebookLM and getting an executive summary, highlights, and citations in seconds.

Why This Is Bigger Than You Think

Most AI tools today either specialize in text (like ChatGPT) or require custom pipelines (e.g., Whisper for transcription combined with LangChain for orchestration). NotebookLM brings these capabilities together in a single consumer-facing interface.

This means:

- No setup required

- No manual transcript uploads (eventually)

- Clean UI with contextual follow-up questions

- Built directly into the Google ecosystem

This update aligns with the shift toward AI-first productivity, where your notes, files, and now videos become part of a searchable, context-aware knowledge space.

What Types of Businesses Will Benefit?

This functionality opens the door for:

- Agencies summarizing client onboarding calls or workshops

- Education platforms converting lectures into study material

- HR departments analyzing recorded interviews

- Legal firms extracting insights from depositions or compliance videos

- Sales teams turning demo recordings into training summaries

Combined with automation platforms like Make.com or n8n, this capability lets you build scalable workflows for knowledge extraction, documentation, and compliance.

Internal Use Case: How Scalevise Applies NotebookLM

At Scalevise, we’ve used NotebookLM to:

- Summarize video case studies from client feedback

- Combine transcripts with strategy docs for cross-analysis

- Answer internal product team questions based on demo footage

It's not just a summarizer. It's a context-aware research assistant that works across media.

External Signals: This Is Just the Beginning

Google is not alone. Companies like Mem and Rewind are also exploring personal knowledge spaces with multimodal inputs. Meanwhile, Microsoft’s Copilot continues to expand inside Office with similar ambitions.

NotebookLM’s competitive edge lies in:

- Prompt-based interaction

- Context stacking across files and media

- Free access under Google Labs (for now)

Conclusion: Your Knowledge Stack Is Now Multimodal

AI is no longer just about text. With tools like NotebookLM, being able to extract insights from video, voice, and visuals in one place changes how we work.

The next logical step? Combining AI agents, automations, and platforms like NotebookLM into your daily operations.

Want to explore how you can deploy AI across your internal content stack?

👉 Get in touch with Scalevise, and we'll show you exactly how to start.