Persistent Memory in GPT-5: Security Blessing or Privacy Nightmare?

As AI evolves, so does its memory. But is that a feature or a red flag?

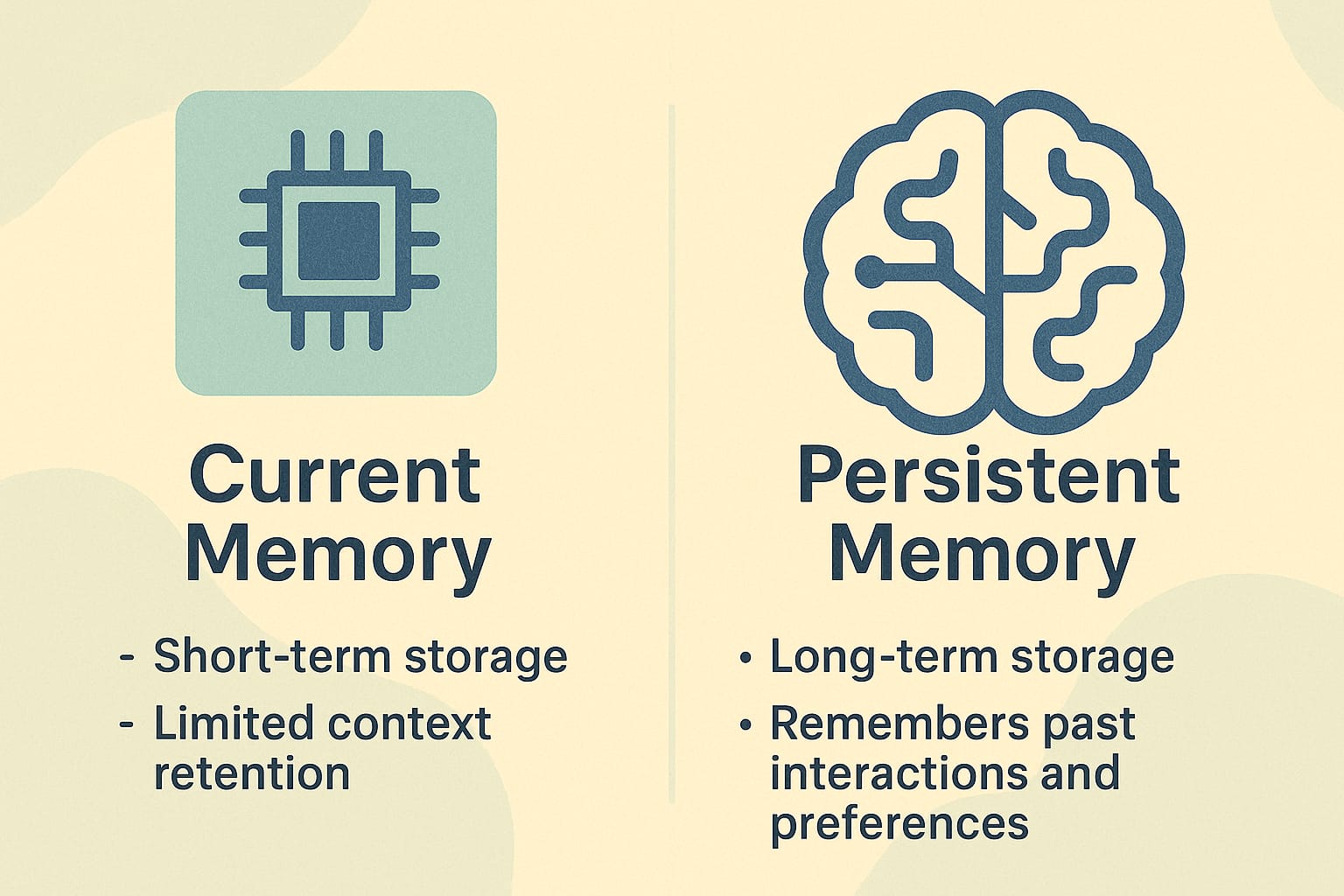

Persistent memory in GPT-5 marks a new era for AI interactions. It allows the model to retain information between sessions, remember user preferences, and offer more personalized responses over time. But with great memory comes great responsibility, along with serious concerns about security, privacy, and compliance.

What Is Persistent Memory in GPT-5?

GPT-5’s persistent memory means the AI doesn’t just respond in the moment. It can recall what you discussed yesterday, what projects you’re working on, and even your preferred tone of communication.

OpenAI claims this will improve long-term usability, enabling smoother workflows, ongoing assistant tasks, and personalized automation.

But this also means your data, possibly sensitive or confidential, might be stored and recalled indefinitely.

Benefits: Why Businesses Are Interested

- More contextual responses: Less need to re-explain tasks or preferences.

- Improved automation: Agents can handle ongoing tasks more intelligently.

- Efficient workflows: Continuity across sessions reduces friction.

It sounds like a dream for productivity. Until the security audit begins.


The Privacy and Compliance Risks

While persistent memory sounds like an upgrade, the risks are significant, especially under GDPR, HIPAA, and other data protection regulations worldwide.

Key concerns:

- What data is stored? If your AI remembers sales data, client names, or internal processes, some of it may qualify as personal data (PII).

- Where is it stored? Are you in control of the data's location and retention period?

- Can it be deleted? Users must be able to exercise their right to erasure under GDPR. Does the memory feature support that?

- Auditability: Can you trace what the AI remembers and when it used that data?
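To make the deletion and auditability questions concrete, here is a minimal Python sketch of a memory store you might run on your own side, for example behind a middleware layer. It is not how GPT-5 stores memory internally; `AuditableMemoryStore`, its methods, and the in-process dictionary backend are hypothetical illustrations.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEvent:
    timestamp: datetime
    user_id: str
    action: str  # "store", "recall", or "erase"
    detail: str  # metadata only; never the remembered content itself


class AuditableMemoryStore:
    """Hypothetical per-user memory store that logs every write, read, and erasure."""

    def __init__(self) -> None:
        self._memories: dict[str, list[str]] = {}
        self.audit_log: list[AuditEvent] = []

    def _log(self, user_id: str, action: str, detail: str) -> None:
        self.audit_log.append(
            AuditEvent(datetime.now(timezone.utc), user_id, action, detail)
        )

    def store(self, user_id: str, fact: str) -> None:
        self._memories.setdefault(user_id, []).append(fact)
        # Log only the size, so erasing a memory does not leave its content in the log.
        self._log(user_id, "store", f"{len(fact)} chars")

    def recall(self, user_id: str) -> list[str]:
        facts = list(self._memories.get(user_id, []))
        self._log(user_id, "recall", f"{len(facts)} item(s)")
        return facts

    def erase(self, user_id: str) -> int:
        """Delete everything remembered about a user; return how many items were removed."""
        removed = len(self._memories.pop(user_id, []))
        self._log(user_id, "erase", f"{removed} item(s) removed")
        return removed


store = AuditableMemoryStore()
store.store("alice", "Prefers a formal tone")
store.store("alice", "Working on the Q3 campaign")
print(store.recall("alice"))   # ['Prefers a formal tone', 'Working on the Q3 campaign']
print(store.erase("alice"))    # 2
print(store.recall("alice"))   # []
print([e.action for e in store.audit_log])  # ['store', 'store', 'recall', 'erase', 'recall']
```

The audit log deliberately records sizes and counts rather than content: an audit trail that copies remembered text would itself become a second, unerasable copy of personal data.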

Example Scenario: A GDPR Conflict

A marketing team uses GPT-5 to help craft campaigns. Over time, the AI remembers previous promotions, customer segmentation data, and internal KPIs.

This memory is incredibly helpful, but it also creates compliance gaps:

- No explicit consent was gathered.

- The data can’t be audited or deleted by default.

- The company has no idea what the AI retains in memory.

If an EU regulator investigates, that’s a serious liability.

How to Stay in Control

To harness the benefits of persistent memory without exposing your business to risk, you need safeguards.

✅ Use AI Middleware

A middleware layer between the user and GPT-5 can:

- Log all memory-related activity

- Offer opt-in/opt-out options for memory

- Store user consent

- Trigger compliance alerts

- Route memory data through secure, local storage
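The middleware responsibilities listed above can be sketched in a few dozen lines of Python. This is an illustration under stated assumptions, not Scalevise's implementation or an OpenAI API: `MemoryMiddleware` and `fake_model` are hypothetical, a single email regex stands in for real PII detection, a Python dict stands in for your own secure local storage, and the model call is stubbed so the example runs without network access.

```python
import logging
import re
from typing import Callable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("memory-middleware")

# Rough heuristic for email addresses; real PII detection needs far more than one regex.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


class MemoryMiddleware:
    """Hypothetical layer between users and a model that decides what may be remembered."""

    def __init__(self, call_model: Callable[[str, list[str]], str]) -> None:
        self.call_model = call_model                   # stand-in for a real model client
        self.consent: dict[str, bool] = {}             # user_id -> opted in to memory?
        self.local_memory: dict[str, list[str]] = {}   # memory kept on your own storage
        self.alerts: list[str] = []

    def set_consent(self, user_id: str, opted_in: bool) -> None:
        self.consent[user_id] = opted_in
        if not opted_in:
            # Opting out also discards anything remembered so far.
            self.local_memory.pop(user_id, None)
        log.info("consent user=%s opted_in=%s", user_id, opted_in)

    def remember(self, user_id: str, fact: str) -> bool:
        if not self.consent.get(user_id, False):  # opt-in: no consent means no memory
            log.info("memory write skipped user=%s (no consent)", user_id)
            return False
        if EMAIL_PATTERN.search(fact):
            self.alerts.append(f"possible PII stored for {user_id}")
            log.warning("compliance alert user=%s: possible PII", user_id)
        self.local_memory.setdefault(user_id, []).append(fact)
        log.info("memory write user=%s", user_id)
        return True

    def ask(self, user_id: str, prompt: str) -> str:
        memories = self.local_memory.get(user_id, [])
        log.info("memory read user=%s items=%d", user_id, len(memories))
        return self.call_model(prompt, memories)


def fake_model(prompt: str, memories: list[str]) -> str:
    # Hypothetical stub: a real client would send the prompt plus memories to the model.
    return f"{prompt} (using {len(memories)} remembered item(s))"


mw = MemoryMiddleware(fake_model)
mw.remember("bob", "Prefers bullet points")      # skipped: no consent yet
mw.set_consent("bob", True)
mw.remember("bob", "Prefers bullet points")
mw.remember("bob", "Contact: bob@example.com")   # stored, but raises an alert
print(mw.ask("bob", "Draft the weekly update"))  # Draft the weekly update (using 2 remembered item(s))
print(mw.alerts)                                 # ['possible PII stored for bob']
```

Here a suspected PII write is stored and flagged rather than blocked; a stricter policy could reject it outright. Because every memory read and write passes through one class, logging, consent, and alerts cannot be bypassed by individual callers.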

Read more about how Scalevise implements these layers:

https://scalevise.com/resources/gdpr-compliant-ai-middleware/

Checklist for Safe Use of Persistent Memory

- [ ] Enable opt-in memory features only with documented consent

- [ ] Log every memory access and recall

- [ ] Create internal policies for data retention and deletion

- [ ] Implement custom middleware for observability

- [ ] Educate your team on privacy risks

- [ ] Monitor for hallucinations and unintended recalls
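A retention policy is easier to enforce when it exists as code rather than only as a document. The sketch below assumes each memory entry records when it was created and shows how a scheduled purge job might drop anything older than the policy allows. `MemoryItem`, `purge_expired`, and the 30-day window are illustrative choices, not a legal standard or a GPT-5 setting.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class MemoryItem:
    user_id: str
    content: str
    created_at: datetime  # timezone-aware creation time


def purge_expired(
    items: list[MemoryItem], retention: timedelta, now: Optional[datetime] = None
) -> tuple[list[MemoryItem], int]:
    """Drop items older than the retention period; return (kept items, number purged)."""
    if now is None:
        now = datetime.now(timezone.utc)
    cutoff = now - retention
    kept = [item for item in items if item.created_at >= cutoff]
    return kept, len(items) - len(kept)


now = datetime(2025, 1, 31, tzinfo=timezone.utc)
items = [
    MemoryItem("alice", "Q4 promo results", now - timedelta(days=45)),
    MemoryItem("alice", "Prefers short summaries", now - timedelta(days=5)),
]
kept, purged = purge_expired(items, retention=timedelta(days=30), now=now)
print([i.content for i in kept], purged)  # ['Prefers short summaries'] 1
```

Passing `now` explicitly keeps the function deterministic and easy to test; in practice you would run it on a schedule and record the purge count in the same log you use for memory access.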

Scalevise Can Help

At Scalevise, we help fast-scaling teams use AI safely and effectively. Our custom middleware solutions ensure persistent memory is not a liability but a strategic advantage.

We combine:

- Real-time logging

- Consent frameworks

- Compliance automations

- Agent-level memory controls

Contact us at: https://scalevise.com/contact